AI Readiness Assessment Quiz

Answer each question below to evaluate your organization’s readiness to adopt and scale AI. Your answers point to your AI readiness level and to the next steps worth prioritizing.

- Do you have clear AI goals tied to business outcomes?

- Is your data centralized, governed and of high quality?

- Do you have scalable infrastructure to deploy and monitor AI models (e.g., cloud, MLOps)?

- Are your teams trained in AI skills and working collaboratively across departments?

- Do you have formal AI governance and compliance processes in place?

- Is sensitive data protected in AI workflows, and do you monitor your AI systems for risks?

- Are AI initiatives integrated into real workflows with user buy‑in and training plans?

- Do you have a prioritized AI use‑case matrix based on impact and feasibility?

- Are you able to deploy and monitor AI models in production (not just prototypes)?

- Do stakeholders understand the benefits and limitations of AI and support adoption?

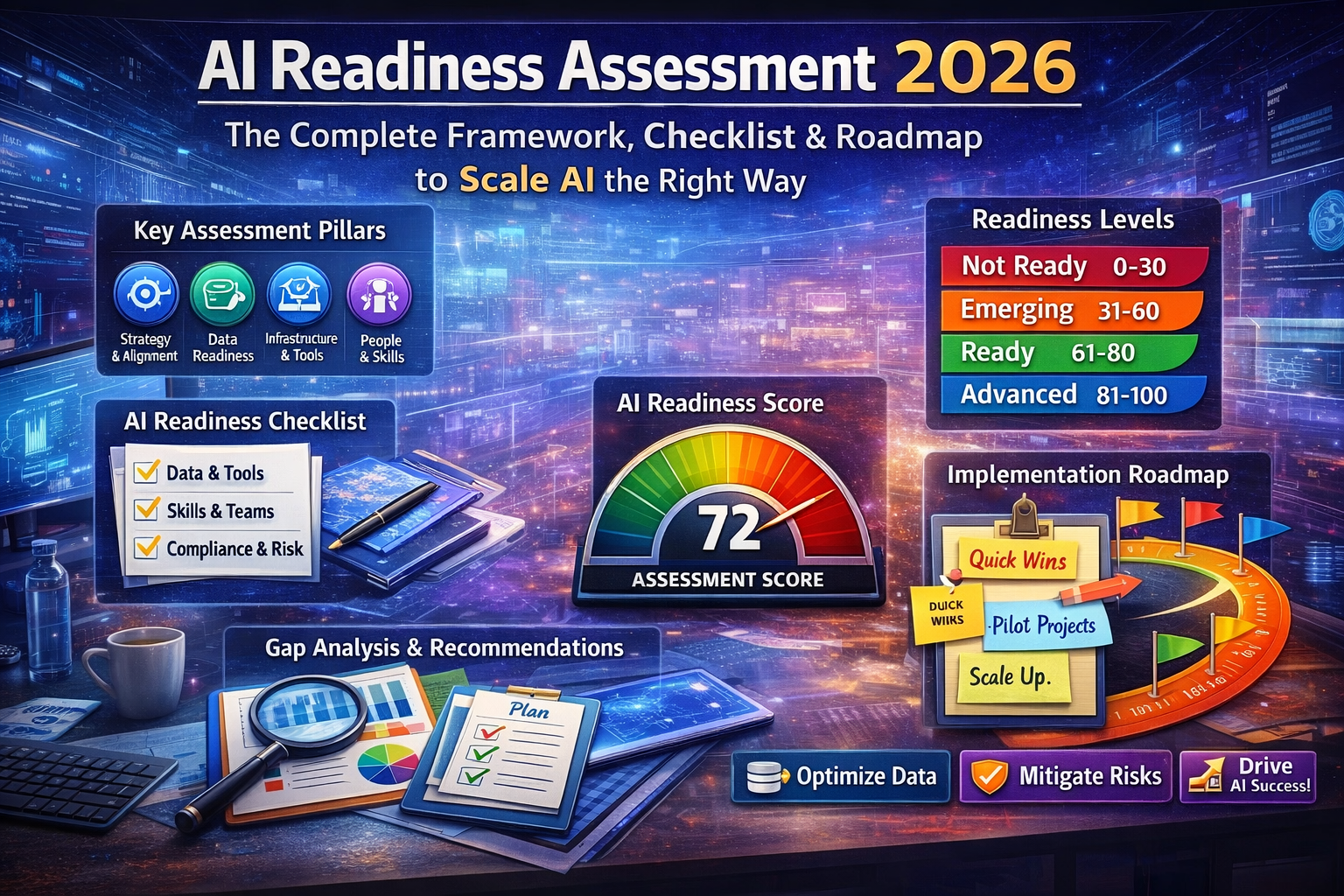

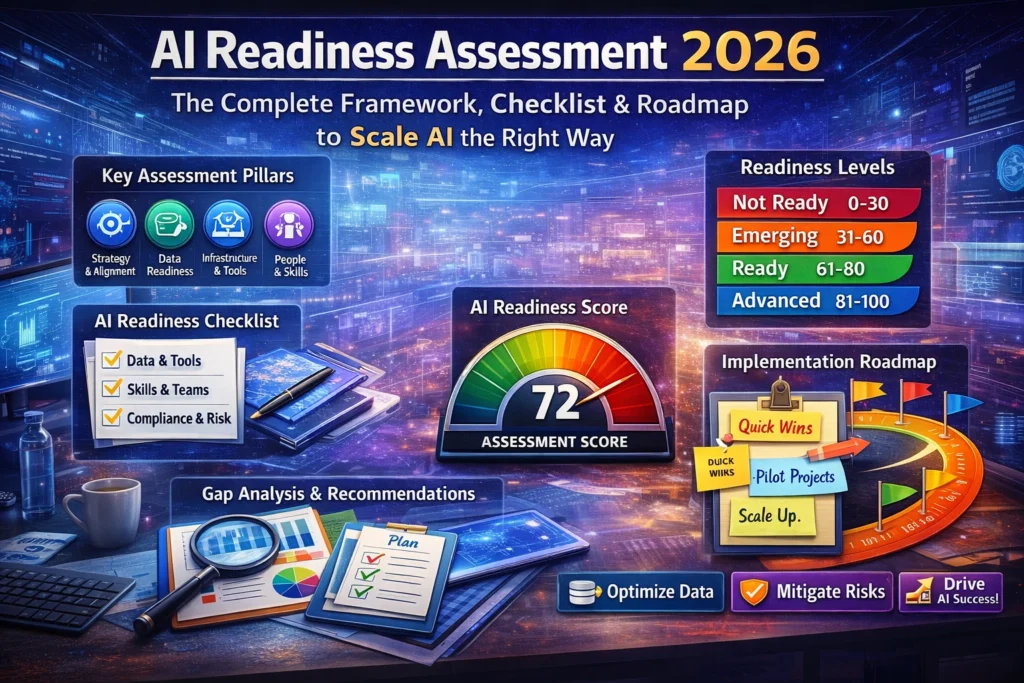

AI Readiness Assessment 2026: The Complete Framework, Checklist & Roadmap to Scale AI the Right Way

AI is everywhere right now — in pitch decks, product roadmaps, investor calls, and every “future of business” LinkedIn post you scroll past. But here’s the uncomfortable truth most teams discover after they’ve spent months experimenting:

Buying AI tools doesn’t mean you’re ready for AI.

And running one chatbot pilot doesn’t mean your business can scale AI safely, profitably, and sustainably. That’s exactly where an AI readiness assessment comes in.

It’s the step that separates companies that successfully operationalize AI from companies that burn budget, trigger compliance issues, frustrate teams, and quietly “pause” AI initiatives.

In this guide, you’ll learn what an AI readiness assessment really is, what it measures, how to score readiness, and how to create a practical roadmap that supports modern AI adoption — including generative AI, automation, predictive analytics, and enterprise-scale machine learning.

You’ll also get a complete checklist you can use today, a simple scoring model, and “what to do next” strategies depending on your score.

Let’s make this simple, realistic, and actionable — without hype.

What Is an AI Readiness Assessment? (Definition)

An AI readiness assessment is a structured evaluation that determines whether an organization has the data, infrastructure, skills, governance, and operational maturity needed to adopt AI effectively.

In plain terms, it answers one question:

“Can we implement AI without breaking things, wasting money, or creating risk?”

It also shows:

- Whether your teams are prepared to build and maintain AI systems

- Whether your organization has the right governance and security structure

- Which AI use cases are realistic right now

- What needs to be fixed before scaling AI

Think of it like a “pre-flight check” for AI.

Because AI is not just a technology shift — it’s an organizational change.

Why AI Readiness Matters More Than Ever

Many organizations jump straight into AI adoption for one reason:

Pressure.

Competitors are adopting AI. Customers expect faster service. Leadership wants innovation. Teams want automation.

But organizations that skip readiness often run into painful, predictable obstacles:

Common AI Adoption Breakdowns

- AI outputs are unreliable because data quality is poor

- Teams don’t trust AI results because models are unexplainable

- Costs spiral due to inefficient infrastructure and tool sprawl

- AI initiatives fail to gain adoption because workflows aren’t aligned

- Legal/compliance issues emerge because governance wasn’t built early

- Small pilots succeed but scaling fails because there’s no operating model

The point isn’t to slow down AI.

The point is to avoid “AI theatre” — projects that look exciting but don’t deliver durable value.

A readiness assessment gives you:

- Clarity

- Prioritization

- Risk reduction

- A roadmap that gets buy-in from leadership and teams

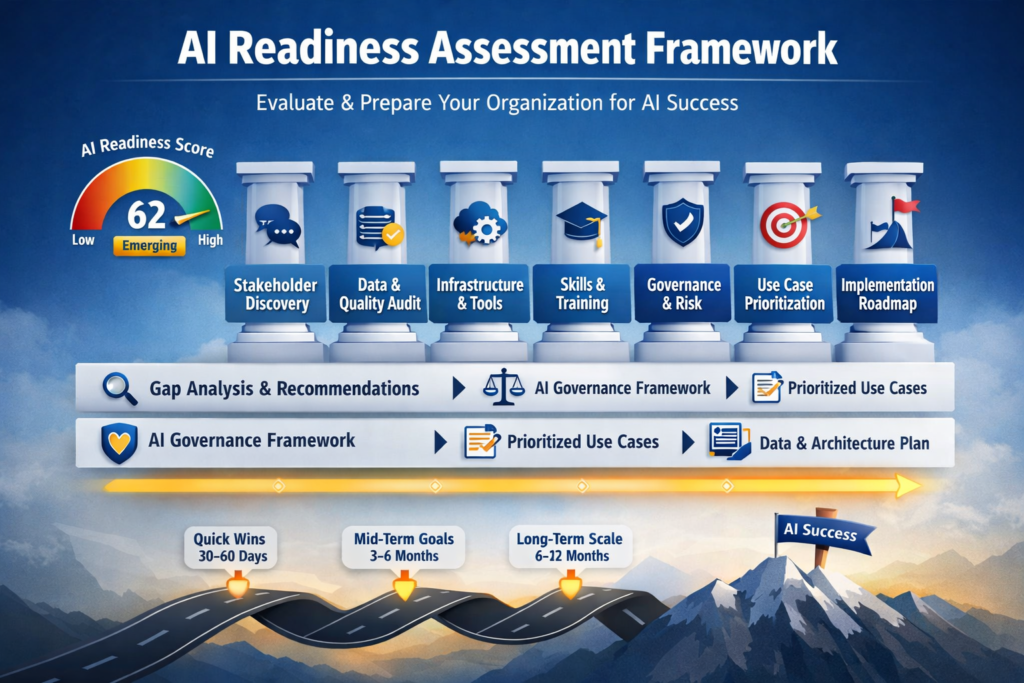

What an AI Readiness Assessment Evaluates (7 Core Pillars)

A strong AI readiness assessment typically evaluates 7 pillars.

Together, they determine whether your business can adopt AI responsibly and at scale.

The 7 Pillars of AI Readiness

- Strategy & Business Alignment

- Data Readiness

- Infrastructure & Tooling

- People & Skills

- Governance, Compliance & Risk

- Security & Responsible AI

- Change Management & Adoption

Let’s break down each pillar — including what “ready” looks like, what “not ready” looks like, and what you can do to improve quickly.

1) Strategy & Business Alignment

This pillar asks one essential question:

Do we know why we’re using AI, and what success means?

Most AI projects that fail don’t fail because the models are bad. They fail because:

- Use cases aren’t prioritized

- Outcomes aren’t defined

- AI doesn’t align with business workflows

- Teams build AI because it’s trendy, not because it solves a business problem

Signs You’re Ready

- You have clear AI goals tied to business outcomes (revenue, efficiency, CX, risk)

- You’ve identified specific use cases with measurable KPIs

- Leadership supports AI adoption with budget and operational ownership

- You’ve prioritized use cases based on feasibility + impact

Signs You’re Not Ready

- You’re collecting AI tools without a strategy

- AI is treated as an “IT project,” not a business transformation

- KPIs are vague (“increase innovation,” “be more automated”)

- Different departments are experimenting in silos

What to Do Next

- Build a use-case prioritization matrix

- Define 3–5 measurable AI KPIs

- Identify executive sponsorship + operational owner

- Align AI initiatives to workflows, not features
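A use-case prioritization matrix can start as a simple 2x2 grid. The sketch below assumes each use case gets a 1–5 team rating for impact and for feasibility, and splits the grid at a threshold of 3. The quadrant labels (“quick win”, “strategic bet”, “fill-in”, “deprioritize”) are a common convention, not something this framework prescribes, and the example use cases are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int       # team rating, 1 (low) to 5 (high); scale is an assumption
    feasibility: int  # team rating, 1 (hard) to 5 (easy); scale is an assumption


def quadrant(uc: UseCase, threshold: int = 3) -> str:
    """Place a use case in a 2x2 impact/feasibility matrix."""
    high_impact = uc.impact >= threshold
    feasible = uc.feasibility >= threshold
    if high_impact and feasible:
        return "quick win"
    if high_impact:
        return "strategic bet"
    if feasible:
        return "fill-in"
    return "deprioritize"


def build_matrix(use_cases: list[UseCase]) -> dict[str, list[str]]:
    """Group use-case names by quadrant, strongest impact x feasibility first."""
    matrix: dict[str, list[str]] = {
        "quick win": [], "strategic bet": [], "fill-in": [], "deprioritize": [],
    }
    for uc in sorted(use_cases, key=lambda u: u.impact * u.feasibility, reverse=True):
        matrix[quadrant(uc)].append(uc.name)
    return matrix


if __name__ == "__main__":
    # Hypothetical example use cases and ratings.
    candidates = [
        UseCase("Support ticket triage", impact=4, feasibility=5),
        UseCase("Demand forecasting", impact=5, feasibility=2),
        UseCase("Meeting notes summarizer", impact=2, feasibility=5),
        UseCase("Autonomous pricing", impact=2, feasibility=1),
    ]
    for quad, names in build_matrix(candidates).items():
        print(f"{quad}: {names}")
```

Start with the “quick win” quadrant when choosing first pilots; “strategic bets” usually need the data and infrastructure work described in the next pillars.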

2) Data Readiness (The #1 Predictor of AI Success)

If strategy is the “why,” data readiness is the “fuel.” Your AI is only as good as the data feeding it. Even the best model will fail if:

- Data is incomplete

- Data isn’t accessible

- Data lacks consistency

- Data isn’t governed

- Data quality is low

What Data Readiness Covers

- Data availability across systems

- Data quality, completeness, and freshness

- Standardized data definitions and ownership

- Access rights and governance

- Integration between data sources

- Labeling and metadata (especially for ML and GenAI retrieval)

Signs You’re Ready

- Data is centralized or well-connected across systems

- You know where your core data lives and who owns it

- You have documentation and consistent definitions

- Data quality is monitored and improving

- You can access datasets quickly for analytics and modeling

Signs You’re Not Ready

- Data is scattered across silos (tools, teams, vendors)

- Teams don’t trust data accuracy

- You rely on spreadsheets, manual exports, or disconnected CRMs

- There are no data owners or governance policies

- You can’t build clean datasets without weeks of effort

What to Do Next

- Start with a data audit (sources, quality, ownership)

- Create “single source of truth” tables for key data

- Define a data governance model

- Invest in integration (APIs, pipelines, ETL/ELT)

- Build “AI-friendly” datasets for priority use cases
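Part of a first-pass data audit can be automated. The sketch below assumes records arrive as Python dictionaries (for example, rows exported from a CRM) with a timezone-aware `updated_at` timestamp. It reports per-field completeness and the share of records refreshed within a freshness window; the field names and the 30-day window are illustrative assumptions, not fixed standards.

```python
from datetime import datetime, timedelta, timezone


def profile_records(records, fields, updated_field="updated_at",
                    max_age=timedelta(days=30), now=None):
    """Return per-field completeness and overall freshness for a dataset."""
    now = now or datetime.now(timezone.utc)
    total = len(records)
    if total == 0:
        return {"rows": 0, "completeness": {}, "fresh_share": 0.0}
    completeness = {}
    for field in fields:
        # A value counts as present if it exists and is not None or an empty string.
        present = sum(1 for r in records if r.get(field) not in (None, ""))
        completeness[field] = present / total
    # A record is "fresh" if it was updated within max_age of `now`.
    fresh = sum(
        1 for r in records
        if r.get(updated_field) is not None and now - r[updated_field] <= max_age
    )
    return {"rows": total, "completeness": completeness, "fresh_share": fresh / total}


if __name__ == "__main__":
    now = datetime(2026, 1, 31, tzinfo=timezone.utc)
    rows = [  # hypothetical CRM export
        {"email": "a@example.com", "region": "EU", "updated_at": now - timedelta(days=2)},
        {"email": "", "region": "US", "updated_at": now - timedelta(days=90)},
        {"email": "c@example.com", "region": None, "updated_at": now - timedelta(days=10)},
    ]
    print(profile_records(rows, ["email", "region"], now=now))
```

Running a profile like this per source gives the audit a baseline you can re-measure as data quality improves.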

3) Infrastructure & Tooling

This pillar asks:

Do we have the technical foundation to build, deploy, monitor, and scale AI?

AI infrastructure isn’t just cloud servers. It includes:

- Storage and compute

- Pipelines

- Analytics platforms

- MLOps tooling

- LLM workflows (RAG, vector databases, prompt orchestration)

- Monitoring + reliability

Signs You’re Ready

- You have scalable compute (cloud or hybrid)

- Data pipelines and analytics platforms exist

- You can deploy models into production, not just notebooks

- You have MLOps or deployment practices

- You have monitoring, rollback, and version control processes

Signs You’re Not Ready

- AI experiments happen only in isolated environments

- There’s no deployment process beyond “send file to IT”

- Tool sprawl exists (random SaaS tools, scattered licenses)

- Infrastructure costs are unpredictable

- Security controls for AI environments are missing

What to Do Next

- Establish an AI architecture baseline

- Standardize tooling for data + experimentation

- Implement MLOps practices (CI/CD for models)

- Define a GenAI stack if using LLMs (RAG, vector DB, logging)

- Plan infrastructure based on prioritized use cases
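One core idea behind “CI/CD for models” is an automated promotion gate: a new model version replaces the production version only if it clears evaluation checks, and earlier versions stay available for rollback. The sketch below is a minimal in-memory illustration. The `ModelRegistry` class, the single accuracy metric, and the 0.01 minimum gain are hypothetical choices, not the API of any particular MLOps tool.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: str
    accuracy: float  # offline evaluation score on a fixed holdout set (assumed metric)


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; the last entry in history is in production."""
    history: list = field(default_factory=list)

    @property
    def production(self):
        return self.history[-1] if self.history else None

    def promote_if_better(self, candidate: ModelVersion, min_gain: float = 0.01) -> bool:
        """Promote the candidate only if it beats production by at least min_gain."""
        current = self.production
        if current is None or candidate.accuracy >= current.accuracy + min_gain:
            self.history.append(candidate)
            return True
        return False

    def rollback(self):
        """Revert to the previous version, e.g. after a production incident."""
        if len(self.history) > 1:
            self.history.pop()
        return self.production
```

In practice the gate would also check latency, cost, and fairness metrics, and the registry would live in a shared store rather than in memory.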

4) People & Skills

AI rarely fails because your team isn’t smart. It fails when the organization isn’t set up for what AI work requires:

- Cross-functional collaboration

- New skills and roles

- Shared literacy across departments

- Operational ownership (not just “data science team builds it”)

Skills AI Adoption Usually Requires

- Data engineering

- ML engineering

- Product + UX for AI experiences

- Legal/compliance understanding

- Business analytics literacy

- Prompt engineering + AI workflow design (for GenAI)

- Model monitoring and evaluation

Signs You’re Ready

- You have internal AI champions or teams

- You can define AI requirements without confusion

- Teams understand AI limitations and risks

- Roles and responsibilities are clear

- You have a plan for training/upskilling

Signs You’re Not Ready

- AI is limited to one person or one team

- Stakeholders don’t know what AI can or cannot do

- Teams fear AI will replace them, so adoption becomes political

- There’s no staffing plan for AI initiatives

What to Do Next

- Create an AI literacy program for leadership + teams

- Define AI roles: owner, builder, validator, approver

- Hire or train for missing roles

- Build a cross-functional AI steering group

- Encourage collaboration between domain experts and AI teams
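Once roles are defined, a simple check can flag initiatives that are missing one. The sketch below assumes each initiative maps the four roles named above (owner, builder, validator, approver) to a person. The rule that a builder should not validate their own work is an added separation-of-duties assumption, not part of the framework above.

```python
REQUIRED_ROLES = ("owner", "builder", "validator", "approver")


def role_gaps(assignments: dict[str, str]) -> list[str]:
    """Return problems with an initiative's role assignments (empty list means OK)."""
    issues = [f"missing {role}" for role in REQUIRED_ROLES if not assignments.get(role)]
    builder = assignments.get("builder")
    # Assumed separation-of-duties rule: builders should not validate their own work.
    if builder and builder == assignments.get("validator"):
        issues.append("builder also validates their own work")
    return issues


if __name__ == "__main__":
    # Hypothetical initiative with a missing approver and a self-validating builder.
    print(role_gaps({"owner": "Ana", "builder": "Raj", "validator": "Raj"}))
```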

5) Governance, Compliance & Risk

This pillar is where many organizations get surprised, especially after launching GenAI tools.

Governance is not bureaucracy. It’s how you ensure AI is:

- Fair

- Compliant

- Traceable

- Safe

- Aligned with your organization’s values and legal requirements

Governance Covers

- Model approval and audit trails

- Data privacy compliance

- Transparency and explainability

- Responsible AI policy

- Vendor and third-party AI governance

- Documentation and reporting

Signs You’re Ready

- You have AI policies and compliance review processes

- You document datasets, models, and decision logic

- You can explain AI decisions where required

- You have risk scoring for AI use cases

- You have a governance body (even small) overseeing AI programs

Signs You’re Not Ready

- Teams use AI tools without oversight

- No documentation exists for models or prompts

- Compliance is “someone else’s problem”

- You cannot explain outputs to customers, auditors, or regulators

- Risk is discovered after deployment

What to Do Next

- Create an AI governance framework (lightweight but real)

- Start documentation from day 1

- Implement model evaluation policies (bias, fairness, accuracy)

- Align AI strategy with industry regulations

- Create a repeatable review process for AI deployments
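Risk scoring for AI use cases is often done with a likelihood-times-impact matrix, a common risk-management convention. The sketch below assumes 1–5 ratings for both factors and uses hypothetical tier cut-offs (15 and above is high, 8 and above is medium); adjust them to your own risk appetite and regulatory context.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    use_case: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (severe)

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def tier(self) -> str:
        # Hypothetical cut-offs: tune to your organization's risk appetite.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def register_report(risks: list[Risk]) -> list[tuple[str, str, int, str]]:
    """Sort risks from highest to lowest score for review by the governance body."""
    ordered = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.use_case, r.description, r.score, r.tier) for r in ordered]
```

Rows from `register_report` can seed the risk register that the governance review maintains over time.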

6) Security & Responsible AI

AI introduces new security risks:

- Data leakage

- Prompt injection

- Model inversion

- Insecure integrations

- Misuse

- Supplier/vendor vulnerabilities

Security also includes responsible AI practices:

- Safe output controls

- Human-in-the-loop checks

- Access permissions

- Monitoring for abuse

Signs You’re Ready

- You restrict access to sensitive data in AI workflows

- You have secure AI environments and logging

- You evaluate AI tools/vendors before adoption

- You use red-teaming or testing for GenAI risks

- You have incident response plans for AI failures

Signs You’re Not Ready

- Teams paste sensitive data into public AI tools

- No logging or monitoring exists

- AI systems can be manipulated through inputs

- Vendor risks aren’t reviewed

- No policy exists for AI usage

What to Do Next

- Establish secure AI usage guidelines

- Implement logging and monitoring for GenAI workflows

- Limit data access with role-based controls

- Conduct AI security testing (including prompt injection testing)

- Define incident response processes specifically for AI tools
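Two of these controls, role-based data access and keeping sensitive data out of prompts, can be sketched in a few lines. The role-to-dataset mapping and the regular expressions below are illustrative assumptions. Simple patterns like these catch only obvious emails and phone-number-like strings (and may also mask other long digit runs, such as dates), so production systems typically rely on dedicated data-loss-prevention tooling.

```python
import re

# Hypothetical mapping of roles to the datasets they may send to AI workflows.
ROLE_PERMISSIONS = {
    "support_agent": {"kb_articles", "ticket_history"},
    "analyst": {"kb_articles", "sales_aggregates"},
}

# Deliberately simple patterns; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def can_use(role: str, dataset: str) -> bool:
    """Deny by default: unknown roles or datasets get no access."""
    return dataset in ROLE_PERMISSIONS.get(role, set())


def redact(text: str) -> str:
    """Mask obvious emails and phone numbers before text leaves your environment."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    print(can_use("analyst", "ticket_history"))
    print(redact("Contact jane.doe@example.com or +1 (555) 123-4567."))
```

Redaction runs before any prompt is sent to an external model, and access checks run before a dataset is retrieved at all.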

7) Change Management & Adoption (The Most Underrated Pillar)

Even if your AI is technically perfect…

If teams don’t adopt it, it fails.

AI readiness includes:

- Stakeholder alignment

- Communication plans

- Workflow integration

- Feedback loops

- Training and enablement

Signs You’re Ready

- AI is integrated into workflows, not layered on top

- Teams understand “how AI helps them”

- You have feedback loops and continuous improvement cycles

- Adoption is tracked and encouraged

Signs You’re Not Ready

- AI is forced onto teams without input

- People don’t trust the output

- AI tools feel like extra work

- There’s no support or training

- Early failures reduce long-term buy-in

What to Do Next

- Create a change management plan for AI projects

- Include users early (discovery interviews)

- Build explainability into UX

- Provide training and onboarding

- Track adoption metrics and iteratively improve
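Adoption metrics can start simple. The sketch below assumes you log, for one period, which users interacted with an AI tool and whether they accepted its suggestions. Active-user rate and suggestion acceptance rate are common starting metrics, not ones this framework prescribes, and the event format is hypothetical.

```python
def adoption_metrics(eligible_users: set[str], events: list[dict]) -> dict[str, float]:
    """Compute active-user rate and suggestion acceptance rate for one period.

    Each event is assumed to look like {"user": "u1", "accepted": True}.
    Events from users outside the eligible group are ignored.
    """
    in_scope = [e for e in events if e["user"] in eligible_users]
    active = {e["user"] for e in in_scope}
    decisions = [e["accepted"] for e in in_scope]
    return {
        "active_user_rate": len(active) / len(eligible_users) if eligible_users else 0.0,
        "acceptance_rate": sum(decisions) / len(decisions) if decisions else 0.0,
    }
```

A falling acceptance rate alongside steady usage is often an early sign that people don’t trust the output.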

AI Readiness Checklist (Quick Self-Assessment)

Use this checklist to score your readiness across all pillars.

Strategy & Alignment

- We have defined business goals for AI

- We have prioritized use cases by impact + feasibility

- KPIs are measurable and tracked

- Ownership is clearly assigned

Data Readiness

- We know where our key datasets live

- Data is accessible, clean, and consistent

- Data has owners and governance policies

- Data integration exists across core systems

Infrastructure & Tooling

- We have scalable compute + storage

- We can deploy AI into production

- We have monitoring and version control

- Tooling is standardized (not chaotic)

People & Skills

- We have AI literacy across teams

- Roles are defined (builder, owner, reviewer)

- We have training or hiring plans

- Collaboration exists between domain + AI teams

Governance & Compliance

- We have AI policies or governance frameworks

- Models/prompts are documented

- Compliance is integrated into AI workflows

- AI risk scoring exists

Security & Responsible AI

- Sensitive data is protected

- AI environments are monitored

- Vendor risk is assessed

- We test for misuse and security vulnerabilities

Adoption & Change Management

- AI supports real workflows

- Teams trust and understand AI results

- Training + enablement exists

- Feedback loops exist and improvements are continuous
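To turn this checklist into 0–10 pillar scores, one simple convention is to give each pillar 10 points divided evenly across its statements, which works out to 2.5 points for each of the four statements you can honestly check. That equal weighting is an assumption; you could weight items differently or allow partial credit.

```python
def pillar_scores(answers: dict[str, list[bool]]) -> dict[str, float]:
    """Convert checked/unchecked checklist items into a 0-10 score per pillar."""
    scores = {}
    for pillar, items in answers.items():
        if not items:
            raise ValueError(f"No checklist items recorded for {pillar}")
        # Equal weighting per item (assumption): 4 items -> 2.5 points each.
        scores[pillar] = 10 * sum(items) / len(items)
    return scores


if __name__ == "__main__":
    # Hypothetical answers for two of the seven checklist groups.
    print(pillar_scores({
        "Strategy & Alignment": [True, True, False, True],
        "Data Readiness": [True, False, False, False],
    }))
```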

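AI Readiness Scoring Model (0–100)

Score each pillar from 0 to 10 and add up the seven pillar scores. Because the raw total tops out at 70, multiply it by 100/70 (about 1.43) to place it on the 0–100 scale below.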

Readiness Levels

0–30: Not Ready

- Focus on data, governance, and foundational systems

- Avoid scaling AI until basics are fixed

31–60: Emerging

- Some foundations exist, but gaps will block scale

- Start with low-risk pilots while improving fundamentals

61–80: Ready

- You can run meaningful pilots and scale within months

- Build an AI operating model and governance to scale safely

81–100: Advanced

- You can scale AI across departments with confidence

- Optimize, innovate, and build competitive advantage
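The scoring model can be expressed as a small function. Because seven pillars scored 0–10 give a raw total of at most 70, the sketch rescales the total to 0–100 and rounds it to one decimal place before applying the readiness bands above. The bands are written in whole numbers, so treating a value such as 30.5 as “Emerging” is an assumption.

```python
PILLARS = (
    "Strategy & Business Alignment",
    "Data Readiness",
    "Infrastructure & Tooling",
    "People & Skills",
    "Governance, Compliance & Risk",
    "Security & Responsible AI",
    "Change Management & Adoption",
)


def readiness(scores: dict[str, float]) -> tuple[float, str]:
    """Return (score on 0-100, readiness level) from seven 0-10 pillar scores."""
    missing = [p for p in PILLARS if p not in scores]
    if missing:
        raise ValueError(f"Missing pillar scores: {missing}")
    for pillar in PILLARS:
        if not 0 <= scores[pillar] <= 10:
            raise ValueError(f"{pillar} must be scored 0-10, got {scores[pillar]}")
    raw = sum(scores[p] for p in PILLARS)                  # 0 to 70
    total = round(raw * 100 / (10 * len(PILLARS)), 1)      # rescaled to 0 to 100
    if total <= 30:
        level = "Not Ready"
    elif total <= 60:
        level = "Emerging"
    elif total <= 80:
        level = "Ready"
    else:
        level = "Advanced"
    return total, level
```

For example, a score of 5 on every pillar gives a raw total of 35, which rescales to 50.0 and lands in “Emerging”.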

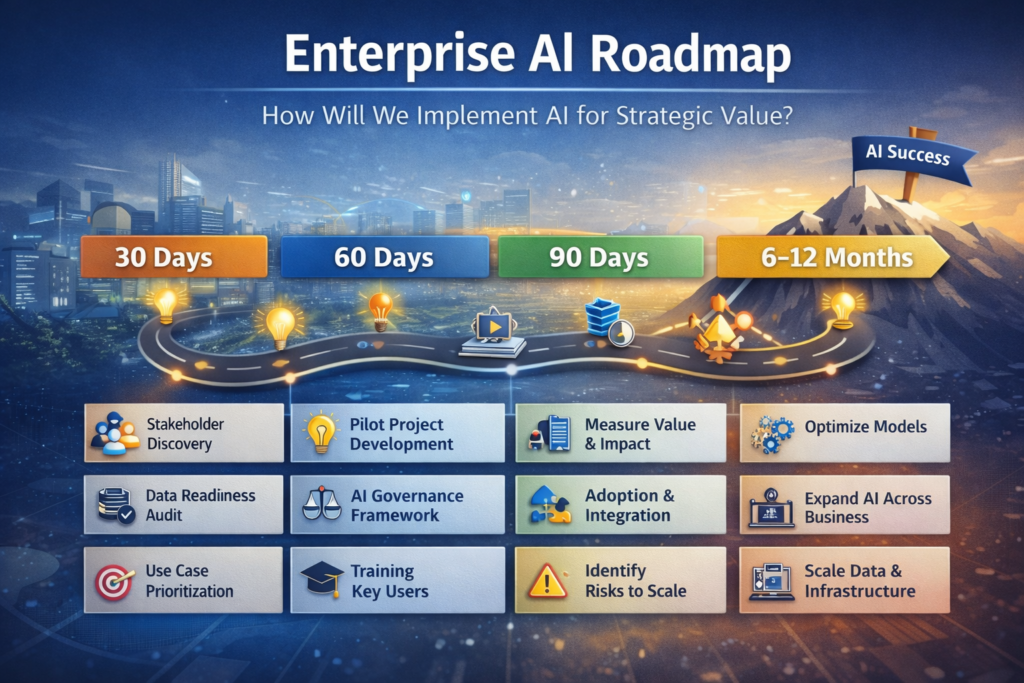

AI Readiness Assessment Process (Step-by-Step Roadmap)

Here’s how a complete AI readiness assessment typically works.

Step 1: Stakeholder Discovery

Interview leaders and teams to learn:

- Business objectives

- Pain points

- Process bottlenecks

- Expectations and fears

- Current AI usage

Outcome: AI opportunities + adoption risks

Step 2: Data Audit

Evaluate:

- Data sources

- Data accessibility

- Quality, completeness, bias risks

- Governance and ownership

Outcome: Data readiness score + required improvements

Step 3: Infrastructure Review

Assess:

- Cloud maturity

- Tooling

- Deployment processes

- Scalability

- Monitoring capabilities

Outcome: AI architecture plan + tooling recommendations

Step 4: Skills & Org Readiness

Evaluate:

- Team structure

- Skill gaps

- Training needs

- Roles and responsibilities

Outcome: Staffing model + training roadmap

Step 5: Governance & Risk Assessment

Review:

- Compliance requirements

- Privacy risks

- Audit needs

- Responsible AI policies

- Vendor and tool risks

Outcome: Governance framework + risk register

Step 6: Use Case Prioritization

Rank use cases based on:

- ROI potential

- Feasibility

- Risk level

- Time to value

Outcome: Prioritized AI use case portfolio
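The four ranking criteria can be combined into one weighted score. The sketch below assumes each criterion is rated 1–5 and inverts risk and time to value, so lower risk and faster payoff score higher. The weights and example use cases are hypothetical and should be agreed with stakeholders.

```python
from dataclasses import dataclass

# Hypothetical weights; they should sum to 1.0.
WEIGHTS = {"roi": 0.35, "feasibility": 0.30, "risk": 0.20, "time_to_value": 0.15}


@dataclass
class Candidate:
    name: str
    roi: int            # 1 (low) to 5 (high ROI potential)
    feasibility: int    # 1 (hard) to 5 (easy)
    risk: int           # 1 (low risk) to 5 (high risk)
    time_to_value: int  # 1 (weeks) to 5 (a year or more)


def priority_score(c: Candidate) -> float:
    # Invert "worse when higher" criteria (6 - rating) so every term rewards a better case.
    return (WEIGHTS["roi"] * c.roi
            + WEIGHTS["feasibility"] * c.feasibility
            + WEIGHTS["risk"] * (6 - c.risk)
            + WEIGHTS["time_to_value"] * (6 - c.time_to_value))


def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Order use cases from highest to lowest priority score."""
    return sorted(candidates, key=priority_score, reverse=True)


if __name__ == "__main__":
    portfolio = [
        Candidate("Dynamic pricing", roi=3, feasibility=1, risk=5, time_to_value=5),
        Candidate("Ticket triage", roi=4, feasibility=5, risk=2, time_to_value=1),
        Candidate("Demand forecasting", roi=5, feasibility=2, risk=3, time_to_value=4),
    ]
    for c in rank(portfolio):
        print(f"{c.name}: {priority_score(c):.2f}")
```

Making the weights explicit keeps prioritization debates focused on the ratings and trade-offs rather than on opinions about which project sounds most exciting.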

Step 7: Implementation Roadmap

Build a roadmap with:

- Quick wins (30–60 days)

- Medium-term initiatives (3–6 months)

- Long-term scale projects (6–12 months)

Outcome: AI adoption roadmap + measurable KPIs
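Once each prioritized use case has an estimated time to value, sorting it into the three roadmap horizons is simple to script. The sketch assumes estimates in days, maps anything up to 60 days to quick wins, up to roughly 6 months (180 days) to medium-term, and anything longer to long-term scale projects. The day counts approximate the ranges above, and the example use cases are hypothetical.

```python
def roadmap(estimates: dict[str, int]) -> dict[str, list[str]]:
    """Group use cases into roadmap horizons by estimated days to value."""
    plan: dict[str, list[str]] = {"quick wins": [], "medium-term": [], "long-term": []}
    # Process the fastest payoffs first so each horizon is ordered by time to value.
    for name, days in sorted(estimates.items(), key=lambda item: item[1]):
        if days <= 60:
            plan["quick wins"].append(name)
        elif days <= 180:
            plan["medium-term"].append(name)
        else:
            plan["long-term"].append(name)
    return plan


if __name__ == "__main__":
    print(roadmap({"Ticket triage": 45, "Demand forecasting": 150, "Dynamic pricing": 300}))
```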

Common Mistakes in AI Adoption (And How to Avoid Them)

Even strong organizations make these mistakes:

- Starting With Tools Instead of Use Cases

Fix: Start with problems worth solving.

- Underestimating Data Work

Fix: Treat data readiness as a core AI investment.

- Ignoring Governance Until It’s Too Late

Fix: Build governance early, even if it starts lightweight.

- No Adoption Strategy

Fix: Build AI into workflows and train users.

- Scaling Too Fast

Fix: Scale only after pilots prove value and risks are managed.

AI Readiness vs Data Maturity vs Digital Transformation

These are related, but not the same.

AI Readiness

Can we build and scale AI safely, effectively, and profitably?

Data Maturity

Is our data reliable, governed, accessible, and useful?

Digital Transformation

Have we modernized systems, processes, and operations?

AI readiness depends heavily on data maturity and digital transformation — but it also includes governance, skills, and adoption.

What You Get From an AI Readiness Assessment (Deliverables)

A complete AI readiness assessment typically produces:

- AI Readiness Score (by pillar)

- Gap analysis + recommendations

- Prioritized AI use cases

- AI governance & responsible AI framework

- Risk register (compliance, security, operational risks)

- Data readiness and improvement plan

- AI architecture and tooling plan

- AI adoption roadmap (30/60/90 day + 6–12 months)

- Training + skills development plan

This makes AI adoption measurable, safe, and scalable.

FAQs: AI Readiness Assessment

How long does an AI readiness assessment take?

Typically 2–6 weeks, depending on organization size, data complexity, and stakeholder availability.

What industries benefit most from AI readiness assessments?

Any industry adopting AI at scale, especially:

- Finance

- Healthcare

- Manufacturing

- Ecommerce

- Logistics

- SaaS

- Customer support-heavy businesses

Do we need perfect data before using AI?

Not perfect — but you need enough data quality + governance for your use case.

For GenAI, you also need clean knowledge sources if you want reliable outputs using retrieval-based systems (RAG).

What’s the difference between AI readiness and AI strategy?

AI strategy defines goals and direction.

AI readiness measures whether you have the capabilities to execute the strategy.

Can small businesses do AI readiness assessments?

Yes. Even small teams benefit because it prevents costly tool mistakes and helps prioritize the most realistic AI projects.

What if our AI readiness score is low?

That’s normal. The assessment doesn’t “fail” you — it gives you a roadmap.

Many organizations start in the “Emerging” range and can improve quickly with targeted actions.

What’s the biggest reason AI adoption fails?

Misalignment between data, governance, and real workflows.

AI is often built in isolation and doesn’t integrate into how people actually work.

How often should we reassess AI readiness?

Every 6–12 months, or any time you:

- Adopt major new tools

- Change systems

- Scale AI to new departments

- Face new compliance requirements

Conclusion: AI Readiness Is Your Competitive Advantage

AI readiness isn’t about being “innovative.” It’s about being strategic — and building foundations that let AI deliver real value.

A strong AI readiness assessment helps you:

- Define feasible AI use cases

- Reduce security and compliance risks

- Build trust and adoption

- Create an AI roadmap leadership can support

- Scale AI with measurable ROI

If you want AI to become a reliable operational advantage — not a scattered experiment — start with readiness. Because AI doesn’t reward speed alone. It rewards preparedness.