AI Insider: Latest News & Innovations

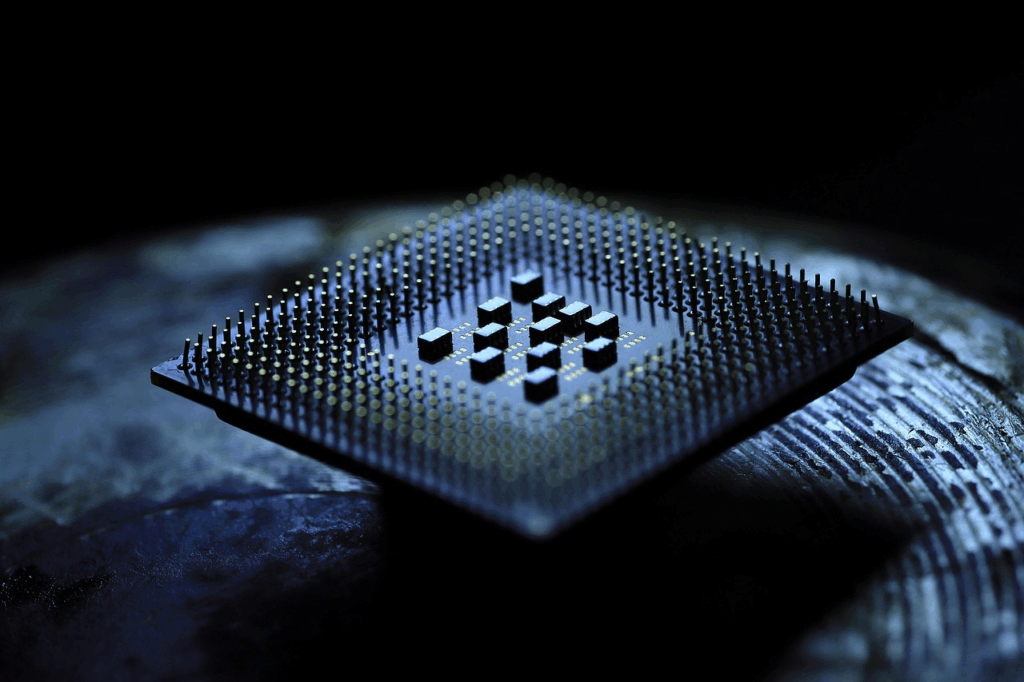

9.1 Nvidia Unveils Blackwell B200: Next-Generation AI Chip to Maintain Lead in AI Computing Race

San Jose, California - Nvidia Corporation, the global leader in artificial intelligence computing, has launched its latest AI accelerator chip, the Blackwell B200, reinforcing its dominance in the rapidly evolving AI hardware market. The announcement comes as competition from industry rivals like AMD, Intel, and various startups continues to intensify.

Breakthrough in Technology

The Blackwell B200 represents a significant leap in processing power and energy efficiency, offering up to 1.7 times the performance of its predecessor while consuming 20% less power. This advancement is particularly crucial for data centers, which are grappling with soaring energy costs driven by the exponential growth of AI workloads.
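As a rough check on what those two headline figures imply together, the snippet below works out the performance-per-watt ratio. It assumes the 1.7x speedup and the 20% power reduction apply to the same workload, which the announcement as summarized here does not confirm; the function name is purely illustrative.

```python
def perf_per_watt_gain(speedup: float, power_reduction: float) -> float:
    """Return performance-per-watt relative to the previous chip.

    speedup: new performance divided by old performance (e.g. 1.7).
    power_reduction: fraction of power saved (e.g. 0.20 for 20% less).
    """
    if not 0 <= power_reduction < 1:
        raise ValueError("power_reduction must be in [0, 1)")
    relative_power = 1.0 - power_reduction  # 20% less power -> 0.8x power
    return speedup / relative_power


if __name__ == "__main__":
    gain = perf_per_watt_gain(1.7, 0.20)
    print(f"Performance per watt vs. previous generation: {gain:.1f}x")
```

Under those assumptions the ratio is 1.7 / 0.8, or a little over 2x the work per unit of energy.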

“Nvidia continues to execute flawlessly, and this chip will likely extend their technological lead for at least another 12-18 months,” said Patrick Moorhead, founder of Moor Insights & Strategy. “Even while their rivals are improving, Nvidia’s software ecosystem remains a significant advantage.”

Key Features of the Blackwell B200:

Improved Tensor Cores: Specifically designed to enhance generative AI models.

208 Billion Transistors: Built using TSMC’s 4NP process for optimal performance.

Enhanced Memory Bandwidth: Utilizing the next-generation HBM3e memory.

Fifth-Generation NVLink: A faster chip-to-chip interconnect for scaling across many GPUs, alongside a new Transformer Engine suited to transformer-based AI systems.

Energy Efficiency: 20 times more efficient than the previous generation for inference-related workloads.

According to Jensen Huang, CEO and founder of Nvidia, “Blackwell is the most potent chip for generative AI in the world. We’ve completely reimagined the memory subsystem and dramatically increased the chip’s ability to handle the large-scale matrix operations that are central to modern AI systems.”

Implications for the Market

The launch of the Blackwell B200 comes at a time when cloud providers and enterprise clients are adopting increasingly complex AI models that require vast processing power. The AI chip’s improved performance and efficiency are expected to make a significant impact on the industry.

Wall Street responded positively to the announcement, with Nvidia’s shares rising by more than 5% in early trading.

Industry Reaction

Major cloud providers have already expressed strong interest in the Blackwell B200. Representatives from several top cloud platforms confirmed that their AI infrastructure services would be among the first to incorporate the new technology.

The anticipation surrounding Blackwell B200 is driven by its potential to increase performance while reducing power consumption—an ideal combination for large-scale AI workloads like generative AI, large language model training, and inference at scale.

Industry analysts note that the chip’s performance-per-watt advantage could lead to notable improvements in operational efficiency and sustainability across hyperscale cloud environments.

Growing Competition

Despite Nvidia’s market dominance, the competition in the AI chip industry is heating up.

Intel is making progress with its Gaudi processors, acquired through the purchase of Habana Labs.

AMD has recently introduced its MI300 series accelerators, aimed directly at competing with Nvidia’s offerings.

Startups like Cerebras Systems and SambaNova Systems are also developing custom silicon for AI, featuring unique architectures such as wafer-scale technology and reconfigurable dataflow systems.

However, analysts note that Nvidia’s robust software ecosystem—featuring CUDA and various AI libraries and frameworks—remains a significant advantage over its competitors.

Looking Ahead

Nvidia announced that the Blackwell B200 chip will be widely available by the third quarter of 2025, with production expected to ramp up throughout the rest of the year. The company also hinted at future innovations, including specialized CPUs for specific AI workloads and ongoing advancements in networking technology.

The introduction of the Blackwell B200 underscores Nvidia’s commitment to maintaining its leadership in the AI chip market—a sector that has rapidly become one of the most lucrative and strategically important areas in the technology industry.

9.2 MCP: The Next Evolution in AI – Bridging LLMs and Intelligent Tools

Let’s be honest—talking to AI these days can feel a bit like magic. You ask a question, and within seconds, a response comes back that’s surprisingly accurate, sometimes even witty.

Large Language Models (LLMs) like ChatGPT, Claude, or Gemini have completely changed the way we interact with technology. But for all their brilliance, they still fall short in one very real way: they can’t actually do things.

Sure, they can explain quantum physics or write you a haiku about pizza—but can they send that email for you? Book your next meeting? Read your inbox and give you a quick summary? Not quite.

That’s where the next big leap in AI comes in—and it’s called the Model Context Protocol, or MCP.

Why LLMs Still Need a Helping Hand

LLMs are like really smart librarians. You ask them for info, and they’ll pull the best books off the shelf and summarize them for you in perfect prose. But if you want them to pick up the phone, schedule a doctor’s appointment, or make an Amazon return? They’re stuck.

They can talk. But they can’t act.

And that’s okay—for now. Because the next wave of innovation is already rolling in, and it’s all about connecting these language models to actual tools that do stuff.

So, What Is an MCP?

Think of MCP as an API-style protocol for your AI assistant. It gives your LLM access to other systems, apps, databases, and services. It’s like giving your smart librarian a smartphone, an email address, the keys to your calendar, and more.

Now, instead of just telling you what the weather’s like in Bali, your AI can actually book the flights, send you a packing list, and reserve a hotel—all while you sip your coffee.

Tools like Perplexity (which can search the web in real-time) or ChatGPT with plugins are early examples of this in action. The LLM itself doesn’t magically gain superpowers—but once it’s connected to tools via APIs, it can feel like it does.
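To make the idea of "connecting an LLM to tools" a little more concrete, here is a minimal sketch of a tool server. MCP messages follow JSON-RPC 2.0 and include methods such as `tools/list` and `tools/call`; everything else below (the registry, the `tool` decorator, the canned weather tool) is hypothetical, runs in a single process, and leaves out parts of the real protocol such as input schemas and transport. It does not use the official SDKs.

```python
import json
from typing import Callable, Dict, Tuple

# Hypothetical registry: tool name -> (description, Python function).
TOOLS: Dict[str, Tuple[str, Callable[..., str]]] = {}


def tool(name: str, description: str) -> Callable:
    """Register a function so the assistant can discover and call it."""
    def decorator(func: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = (description, func)
        return func
    return decorator


@tool("get_weather", "Return a canned forecast (stand-in for a real API).")
def get_weather(city: str) -> str:
    return f"Sunny in {city}"


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    request = json.loads(raw)
    req_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    def reply(key: str, value: dict) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id, key: value})

    if method == "tools/list":
        listing = [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]
        return reply("result", {"tools": listing})
    if method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return reply("error", {"code": -32602, "message": "Unknown tool"})
        _, func = entry
        text = func(**params.get("arguments", {}))
        return reply("result", {"content": [{"type": "text", "text": text}]})
    return reply("error", {"code": -32601, "message": "Method not found"})


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "get_weather", "arguments": {"city": "Bali"}}}
    print(handle_request(json.dumps(call)))
```

The point of the shape is that the model never runs the tool itself: it emits a structured request, and a separate piece of software decides whether and how to carry it out.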

Why This Is a Big Deal

This shift—from passive AI to proactive AI—is huge. We’re talking about going from simple conversations to actual productivity.

Here’s what this could mean in real life:

You ask your AI to review a PDF and summarize the key takeaways—done.

You need to schedule a meeting with three colleagues and find a time that works—handled.

You want a weekly digest of your unread emails and a prioritized to-do list—delivered.

Suddenly, AI isn’t just helping you think; it’s helping you act, or even acting on your behalf.

But We’re Not Quite at Jarvis… Yet

Now, before we all get carried away dreaming about having our own Iron Man-style digital assistant, let’s ground this in reality.

Creating a seamless, reliable system that juggles dozens of tools while protecting your privacy and security is hard. Tools need to be integrated smoothly. The AI needs to know which tool to use, when, and how. And the whole thing has to work consistently, without dropping the ball.

We’re getting closer, but building something that just works across tasks, platforms, and services—every single time—is still a work in progress.

The Bottom Line

MCP is a major step toward a smarter, more action-oriented kind of AI: one that doesn’t just respond with information but actually gets things done.

We’re entering an exciting phase where your AI won’t just be a clever chatbot—it could become your research assistant, your project manager, your inbox triager, your travel agent. The possibilities are wide open.

We’re not quite living in the future we imagined. But with MCP tech, we’re getting a whole lot closer.

9.3 Manus AI: The AI That Actually Gets Things Done

Let’s face it, AI is now everywhere, shaping how we work, communicate, and live. It writes emails, summarizes meetings, and even helps diagnose health issues. But as impressive as these tools can be, they’re not perfect. Sometimes, they sound smart but miss the mark. Why? Because most AI is trained on a firehose of internet data—random, generic, and often outdated.

Now imagine an AI that doesn’t just think; it gets things done. Manus is a general AI agent that bridges minds and actions: it doesn’t just process information, it delivers results. By combining deep understanding with real-world execution, Manus excels at a wide range of tasks in both work and life—handling everything while you rest. It’s not just smarter AI; it’s AI that actually works for you.

The Problem with “Big and Generic”

Large language models are kind of like straight-A students who’ve read every book in the library but still can’t quite grasp what you need. They know a little about a lot, but not a lot about what actually matters to you.

Manus AI wants to change that by flipping the script.

So, How Does It Work?

Manus starts by understanding what you need. Whether it’s an email, a report, a business decision, or even organizing your personal tasks—Manus interprets your goals using natural language and contextual cues. It doesn’t just react to commands; it reasons through the bigger picture.

Plan the Best Path Forward

Once it understands your intent, Manus creates a plan of action. This includes:

Identifying the best tools or steps to achieve the outcome

Prioritizing tasks

Scheduling or automating workflows when possible

Making informed suggestions based on best practices or user-defined preferences

It’s like having a strategist and executor rolled into one.

Execute Tasks Autonomously

Manus can then carry out tasks independently, from writing and research to task management and communication. It connects with your tools (e.g., email, calendar, documents, CRMs) to complete actions rather than just talk about them.

Examples:

Drafts content and sends emails

Books meetings and blocks calendars

Collects data and generates reports

Interfaces with APIs or platforms to complete work
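The plan-then-execute pattern described above can be sketched in a few lines. This is a toy illustration and not Manus's actual design, which the article does not detail: the planner is a fixed recipe standing in for model-driven reasoning, and the tool names and `Step` structure are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(order=True)
class Step:
    priority: int                        # lower number runs first
    tool: str = field(compare=False)     # name of the tool to invoke
    argument: str = field(compare=False)


# Hypothetical tools; real ones would call email, calendar, or CRM APIs.
TOOLBOX: Dict[str, Callable[[str], str]] = {
    "draft_email": lambda topic: f"Draft written about {topic}",
    "book_meeting": lambda topic: f"Meeting booked for {topic}",
}


def plan(goal: str) -> List[Step]:
    """Toy planner: returns a fixed set of steps for any goal."""
    return [
        Step(priority=2, tool="book_meeting", argument=goal),
        Step(priority=1, tool="draft_email", argument=goal),
    ]


def execute(steps: List[Step]) -> List[str]:
    """Run the steps in priority order and collect each tool's result."""
    return [TOOLBOX[step.tool](step.argument) for step in sorted(steps)]


if __name__ == "__main__":
    for line in execute(plan("Q3 review")):
        print(line)
```

Keeping planning and execution separate is a common design choice because the plan can be shown to the user, reordered, or rejected before anything is actually sent or booked.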

Real Use Cases, Real Impact

Unlike conventional tools, Manus can collaborate in real time, either with you or across teams. It facilitates shared understanding, decision support, and cross-functional execution without needing constant micromanagement.

This isn’t a theory. Manus AI is already being used in powerful, people-first ways:

Mental health support: Trained with input from therapists and lived experiences, these AI models respond with empathy—not canned lines.

Legal help: AI that explains legal matters in plain English, guided by legal professionals.

Civic engagement: Tools that help people understand government programs and policies, built with help from policy experts and advocates.

These aren’t chatbots that just sound smart. They’re built to actually help.

AI With a Heart (and a Brain)

The magic of Manus AI isn’t just the tech—it’s the people behind it. By integrating diverse insights and perspectives, they’re building AI that’s more inclusive, more accurate, and more grounded in reality.

The Takeaway

Manus AI isn’t just about smarter technology; it’s about redefining how we work and live. Its end goal is to build a general AI agent that seamlessly connects human intent with real-world execution. By bridging thinking and doing, Manus empowers people to offload complexity, automate routine, and unlock focus for what truly matters. It’s not here to replace you; it’s here to multiply you.

From Demo to Disruption: Manus AI Unveils Agent-Powered Subscription Model

Butterfly Effect, the startup behind Manus AI, has launched a subscription service, signaling a rapid shift toward commercialization. Following a slick demo video, Manus AI has garnered significant attention, with comparisons to DeepSeek, a service that disrupted Silicon Valley with AI performance comparable to major players like OpenAI and Meta Platforms.

The company is offering tiered pricing, starting at $39 per month, with an advanced $199 option. The latter provides users with the ability to run up to five tasks simultaneously and access more computation credits. Despite its early beta stage, Manus is already monetizing its service, which is built on top of established large language models like Anthropic’s Claude family.

Manus AI aims to do more than respond to queries: it functions as an agent capable of performing complex tasks. This approach moves beyond conventional AI chatbots, offering users a more interactive and practical experience. In addition, the platform will retain a free version with limited access.

The move comes amid fierce competition in China’s AI market, where major companies like Alibaba, Tencent, and Baidu have introduced their own AI offerings. Manus, with its aggressive pricing, is positioning itself as a serious contender in the ongoing price war.

Butterfly Effect is also in talks to raise funds, aiming for a $500 million valuation. Its backers include ZhenFund and HSG, formerly Sequoia China.

As Manus continues to attract attention, its AI agent subscription model promises to pave the way for more sophisticated and accessible AI-driven services across various industries.

9.4 Recently Launched: An Amazing New Product (and a Treasure for Moms!)

From Vision to Reality: How GrayCyan Helped LovingIs.ai Create an AI That Understands Love

LovingIs.ai is on a mission to teach AI what love truly means. Founded by entrepreneur Jen Loving, the company envisions a future where AI interactions are built on a foundation of love, creating safer and more compassionate digital experiences. But making this vision a reality was no simple task. How do you teach AI to genuinely embody love in a meaningful, actionable way?

Before: The Challenges of Building a Love-Centered AI

LovingIs.ai faced several key challenges before finding the right development partner:

Defining ‘Loving’ in an AI Context:

AI models are trained on vast amounts of data but often lack a true understanding of love.

LovingIs.ai needed an AI that went beyond recognizing words or sentiment; it had to respond with a genuine and universal definition of love.

Ensuring the AI-generated responses were consistently kind, empathetic, and aligned with ethical principles was essential.

Finding the Right Development Partner:

Jen and her team approached various developers, but many treated the project as a standard build rather than a mission-driven initiative.

The right team needed to be as committed to the ‘why’ of the project as they were to the ‘how.’

A recommendation led Jen to Nish from GrayCyan, and their approach was refreshingly different — they wanted to understand the purpose behind the technology.

Building AI Compatible Across Platforms:

The AI had to work seamlessly with existing large language models (LLMs) like GPT, Claude, and Google’s Gemini, enhancing and refining their responses.

It required a ‘loving layer’ that ensured compassionate and ethical communication.

Creating an Intuitive User Experience:

Beyond functionality, LovingIs.ai wanted an experience that was visually engaging, warm, and accessible — something that reflected the essence of love.

After: The Transformation with GrayCyan

After partnering with GrayCyan, LovingIs.ai successfully launched its Minimal Lovable Product (MLP) — a term they coined because the product was not just viable but truly lovable. The impact was immediate:

A Fully Functional, Market-Ready AI:

Unlike traditional MVPs, LovingIs.ai’s first product had strong functionality and real-world application from the start.

The AI integrates seamlessly with major LLMs and ensures responses are compassionate, ethical, and grounded in principles of love.

Deep Alignment with the Mission:

GrayCyan’s team didn’t just build the product; they immersed themselves in understanding love.

Through ten 90-minute sessions, they explored cultural, philosophical, and ethical definitions of love to ensure it was authentically embedded in the AI.

Ethical and Thoughtful Prompt Engineering:

The AI applies a love-based filter to all responses, ensuring they are kind, thoughtful, and inclusive.

This ‘loving layer’ helps the AI outperform competitors by providing nuanced and emotionally intelligent responses.

A Beautiful User Experience:

GrayCyan’s design team, led by Shruti, created a logo symbolizing love and safety, which may become a universal emblem for AI systems built with ethical intent.

The interface is sleek, engaging, and promotes trust — all essential qualities for LovingIs.ai’s mission.

Positive Market Reception:

Launched on February 14th — Valentine’s Day — the private beta attracted hundreds of enthusiastic users.

Feedback highlighted LovingIs.ai’s ability to provide a noticeably kinder, safer AI interaction.

The Process: How GrayCyan Brought LovingIs.ai to Life

Immersion in the Mission:

The GrayCyan team participated in training sessions to deeply understand the meaning of love from various perspectives.

Building the Loving Layer:

Instead of creating a new AI model, GrayCyan developed a proprietary ‘loving filter’ that works across existing AI platforms.

Testing and Refinement:

Continuous testing ensured responses were ethically sound and aligned with LovingIs.ai’s standards.

User Experience Design:

The interface was built to be intuitive, visually appealing, and welcoming.

Successful Private Beta:

Early feedback confirmed that the AI provided a more positive and emotionally intelligent experience than traditional systems.
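One plausible shape for a layer that "works across existing AI platforms" is a thin wrapper that attaches the same guidance to every request, whichever backend answers it. The sketch below is an assumption about the general pattern only: the actual filter is described as proprietary, and the guidance text, function names, and stand-in backend here are all hypothetical.

```python
from typing import Callable

# Any chat backend is modeled as: (system_prompt, user_message) -> reply.
ModelFn = Callable[[str, str], str]

# Hypothetical guidance text; the real filter's wording is not public.
GUIDANCE = "Respond with kindness, empathy, and respect for the user."


def with_guidance_layer(model: ModelFn, guidance: str = GUIDANCE) -> Callable[[str], str]:
    """Wrap a backend so every request carries the same guidance prompt."""
    def wrapped(user_message: str) -> str:
        return model(guidance, user_message)
    return wrapped


def echo_model(system_prompt: str, user_message: str) -> str:
    """Stand-in backend that simply shows what it received."""
    return f"[system: {system_prompt}] {user_message}"


if __name__ == "__main__":
    assistant = with_guidance_layer(echo_model)
    print(assistant("I had a rough day."))
```

Because the wrapper only depends on the `(system_prompt, user_message)` signature, swapping one model provider for another would mean writing a new adapter function rather than changing the layer itself.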

Conclusion: A Partnership Built on Love and Innovation

LovingIs.ai’s journey from concept to reality showcases what’s possible when technology is built with purpose and heart. With GrayCyan as a dedicated partner, LovingIs.ai has not only built an AI but also created a movement toward more compassionate, ethical AI interactions.

Jen Loving’s words say it all:

“This experience has been better than I even anticipated. When I first saw the product, I had tears in my eyes because it was more than I imagined it would be. I would 100% recommend GrayCyan to anyone building an AI or tech product that needs to be deeply aligned with their vision.”

With GrayCyan’s continued support, LovingIs.ai is well on its way to creating a more loving, thoughtful AI ecosystem for everyone.

9.5 DeepSeek Labeled a 'National Treasure' in China: Travel Restrictions, Passport Confiscation, and More

DeepSeek, the Chinese AI startup that recently made headlines for its powerful and cost-efficient R1 model, is reportedly imposing strict measures on its most senior engineers. According to AI journalist Kylie Robison, writing for The Verge, DeepSeek has confiscated the passports of its key employees to prevent potential leaks of sensitive information. This move is part of a broader effort to safeguard proprietary technology and state-related secrets amid escalating international AI rivalry.

A New ‘National Treasure’

DeepSeek’s swift rise to prominence has turned it into what many are now calling a ‘national treasure’ of China. Its R1 model, featuring advanced chatbots, AI-driven content generation, and various productivity tools, quickly soared to the top of all major app stores worldwide, surpassing applications from global tech giants like Microsoft, OpenAI, and Google DeepMind.

Severe Restrictions for DeepSeek Engineers

Under the guise of protecting intellectual property and national interests, DeepSeek has implemented strict travel restrictions on its top engineers. These restrictions include:

Passport Confiscation: Engineers are reportedly required to hand over their passports to prevent unauthorized international travel.

Movement Limitations: Severe travel restrictions have been put in place aimed at containing proprietary knowledge within Chinese borders.

While these measures are designed to prevent sensitive information breaches, they have also drawn widespread criticism over concerns related to employee rights and freedom of movement.

Global Implications

The rise of DeepSeek highlights the increasing tension between technological innovation and national security concerns. As the international race for AI dominance intensifies, the restrictions imposed on DeepSeek’s engineers exemplify the lengths countries are willing to go to protect their technological assets.

DeepSeek’s future remains uncertain as the company continues to face growing scrutiny from global regulators. The question remains whether these protective measures will enhance or hinder its progress in the competitive AI landscape.

9.6 OpenAI Delays Free Image Generation Feature Amid High Demand

OpenAI’s much-anticipated image-generation tool powered by the GPT-4o model has hit a roadblock for free users due to overwhelming demand. On Wednesday, March 26, 2025, CEO Sam Altman announced that the rollout of the free image-creation feature is “regrettably postponed for some time,” without offering a clear timeline for when it will be available.

A Surge in Popularity

The image-generation tool gained massive traction immediately after its release, particularly for creating art inspired by popular styles like Studio Ghibli. Social media platforms were flooded with AI-generated visuals, with the trend even prompting Altman himself to share AI-generated images.

This surge in popularity centered on OpenAI’s image-generation feature, built into ChatGPT through the GPT-4o model. Designed to let users generate stunning visuals from simple text prompts, the tool was initially meant to be accessible to everyone, including those on the free tier.

However, the overwhelming demand made it challenging to maintain system stability. As a result, access is currently limited to paid subscribers on the Plus, Pro, and Team plans, leaving free-tier users eagerly waiting.

Technical Challenges and Future Plans

It’s no surprise that OpenAI’s new image-generation tool took off like wildfire. As soon as it launched, people everywhere jumped in to create dreamy, Studio Ghibli-style art and share their AI masterpieces across social media. But as exciting as that was, it also created a bit of a problem behind the curtain.

Turns out, generating images—especially beautiful, detailed ones—takes a lot of computing power. And with millions of users suddenly diving in, OpenAI’s servers were under serious pressure. To keep everything running smoothly for those on paid plans, the company had to hit pause on rolling it out to free-tier users.

This wasn’t about locking out the free crowd—it was about keeping the system stable while they figured out how to scale things up. OpenAI wants everyone to have access eventually, but they need a bit more time to make sure the infrastructure can actually handle it.

As for what’s next? OpenAI is working on beefing up its backend so it can bring this tool to more people—hopefully sooner rather than later. They’re also looking at ways to make the feature even better: more control over styles, smarter prompts, and easier editing. So when it does reach the free tier, it’ll be more polished and more powerful than ever.

The bottom line? OpenAI hasn’t forgotten about free users—they’re just making sure that when you do get the tool, it actually works the way it’s supposed to. Hang tight—it’ll be worth the wait.

What’s Next for Free Users?

Good news: the feature isn’t gone—it’s just on pause for free users.

OpenAI has made it clear that they still plan to roll out the image-generation tool to the free tier eventually. But when it does arrive, it’ll likely come with a few reasonable limitations. Think: daily usage caps or limited prompts per day.

The idea isn’t to restrict creativity, but to make sure the system stays stable and accessible to everyone—without crashing under pressure. It’s all about finding the right balance between making the tool available and keeping it running smoothly.
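A daily usage cap of the kind described above is simple to express in code. The sketch below is a generic in-memory illustration, not OpenAI's implementation; the class name and the limit value are hypothetical, and a production system would keep the counters in shared storage rather than a Python dictionary.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Optional, Tuple


class DailyQuota:
    """Allow each user a fixed number of requests per calendar day."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        # (user_id, day) -> number of requests already granted
        self._used: Dict[Tuple[str, date], int] = defaultdict(int)

    def try_consume(self, user_id: str, today: Optional[date] = None) -> bool:
        """Return True and count the request if the user is under the cap."""
        key = (user_id, today or date.today())
        if self._used[key] >= self.limit:
            return False
        self._used[key] += 1
        return True


if __name__ == "__main__":
    quota = DailyQuota(limit=2)  # hypothetical cap of two images per day
    for attempt in range(3):
        print(attempt + 1, quota.try_consume("free-user"))
```

Keying the counter on the calendar day means the allowance resets on its own when the date changes, with no cleanup job needed for the logic to stay correct.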

So, while it might take a little more patience, free users can look forward to trying it out—just with a few guardrails in place to keep things fair and functional for all.

For now, paid subscribers will retain exclusive access to OpenAI’s image-generation tool, while free users await further updates. Though the company has not provided a specific timeline, it’s clear that OpenAI is actively working toward a solution that addresses the growing demand while ensuring platform stability.

9.7 DOGE: How Government Data Could Hand Unprecedented Power to AI Companies

The Department of Government Efficiency (DOGE) has reportedly sought access to at least seven highly sensitive federal databases, including those managed by the IRS and Social Security Administration. While cybersecurity and privacy are valid concerns, an even bigger worry is being overlooked: if accessed, this kind of data could be used to train large language models (LLMs), possibly even by private AI companies.

Despite assurances from the White House that DOGE data is not being funneled into Elon Musk’s AI projects, reports reveal that individuals affiliated with the DOGE coalition hold positions in companies owned by Musk, raising concerns about potential data leaks to Musk’s AI venture, xAI.

Even more concerning, employees of the FAA with government email addresses are reportedly also employed by SpaceX, implying a possible data-sharing pipeline to xAI. To add to the suspicion, the xAI Grok chatbot has been vague in denying whether it uses DOGE data for training.

Why Government Data is a Goldmine for AI Development

Government databases are a treasure trove for AI developers due to their unparalleled accuracy, scale, and verified nature. Unlike internet data, which is often curated and may lack real-world verification, government data reflects actual human behavior and decision-making over time.

Social Security Payments: Offer insights into demographic trends, aging populations, and economic well-being.

Medicare Claims: Provide valuable data on healthcare outcomes, treatment effectiveness, and public health trends.

IRS Records: Highlight changes in income patterns, economic stability, and employment statistics.

Treasury Data: Map financial flows and economic impacts, providing a comprehensive picture of societal trends.

The integration of this data could give any AI company a competitive edge by enabling highly accurate predictive analytics and decision-making models. It’s the kind of information that can make or break advancements in healthcare, economics, and societal management.

The Implications Are Staggering

If private AI companies gain access to such comprehensive datasets, it could create a technological monopoly that not only accelerates their capabilities but also creates a powerful imbalance. The concern isn’t just about privacy—it’s about power. When AI systems can predict societal trends, economic shifts, or healthcare outcomes with extraordinary precision, the implications for control and influence are profound.

As the conversation continues about the ethical boundaries of AI training, the question remains: Are we prepared to allow AI companies to gain access to such immense, real-world data sets? And if not, what safeguards are we willing to put in place to prevent it?

Contributor:

Nishkam Batta

Editor-in-Chief – HonestAI Magazine

AI consultant – GrayCyan AI Solutions

Nish specializes in helping mid-size American and Canadian companies assess AI gaps and build AI strategies that accelerate AI adoption. He also helps develop custom AI solutions and models at GrayCyan. Nish runs a program for founders to validate their app ideas and go from concept to buzz-worthy launches with traction, reach, and ROI.
