Crowdsourcing In Action

3.1 Data Labeling & Annotation: The Backbone of AI

Data labeling and annotation are essential steps in machine learning, helping AI models accurately interpret raw data like images, videos, audio, and text. By identifying and categorizing objects within this data, labels enable AI to make predictions and estimations, just like humans recognize distinct features (e.g., a dog’s ears or whiskers). Without proper labeling, training AI is like giving a student an exam without prior lessons.

3.2 Why Data Annotation Matters

1. Teaches AI

Data annotation is how we “teach” AI to recognize and understand the world. By labeling images, text, audio, or video with clear, relevant tags, we’re giving the AI worked examples of what to look for. Whether it’s identifying a cat in a photo or detecting sentiment in a review, annotation shows the model which patterns correspond to which labels.

2. Improves Accuracy

Accurate annotations lead to better outcomes. When data is properly labeled, AI models can make better predictions and decisions. Whether it’s diagnosing a medical condition or translating a language, precision in the training data directly boosts the model’s real-world performance and reliability.

3. Enables Automation

Well-annotated data forms the backbone of intelligent automation. From chatbots that understand context to self-driving cars that detect road signs, annotation turns raw data into structured information, helping AI automate complex tasks and drive smarter, faster decision-making.
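In crowdsourced annotation, one common way to pursue the accuracy described above is to collect several labels per item and combine them. The following is a minimal sketch of majority-vote aggregation, not a description of any particular platform. The function name `aggregate_labels`, the `min_agreement` threshold of 0.6, and the sample item IDs are assumptions made for illustration.

```python
from collections import Counter


def aggregate_labels(votes, min_agreement=0.6):
    """Combine several annotators' labels for one item by majority vote.

    Returns (label, agreement). The label is None when the most common
    label falls below the (assumed) agreement threshold, which signals
    that the item should go back for review.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    label, top_count = Counter(votes).most_common(1)[0]
    agreement = top_count / len(votes)
    if agreement < min_agreement:
        return None, agreement
    return label, agreement


# Hypothetical votes from three annotators per image.
crowd_votes = {
    "img_001": ["cat", "cat", "dog"],
    "img_002": ["dog", "cat", "bird"],
}
results = {item: aggregate_labels(votes) for item, votes in crowd_votes.items()}
for item, (label, agreement) in results.items():
    print(item, label, round(agreement, 2))
```

Here `img_001` is accepted as "cat" because two of three annotators agree, while `img_002` has no majority and is held back. Real pipelines often weight annotators by past reliability, which this sketch does not attempt.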

3.3 Types of Data Annotation

1. Image Annotation

This involves labeling elements within an image—like people, vehicles, animals, or objects—usually with bounding boxes or segmentation masks. It helps AI models “see” and understand what’s inside an image. For example, it teaches self-driving cars to recognize traffic signs or pedestrians.
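To make the bounding-box idea concrete, here is a small sketch of what two annotators' labels for the same pedestrian might look like, and how their overlap can be scored with intersection over union (IoU). The record layout, file name, and field names are hypothetical. The `[x, y, width, height]` box convention is assumed here because it matches the one used by COCO.

```python
def iou(box_a, box_b):
    """Intersection over union for two boxes given as [x, y, width, height]."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


# Two hypothetical annotations of the same object by different annotators.
annotation_a = {"image": "street_042.jpg", "label": "pedestrian", "bbox": [100, 50, 40, 80]}
annotation_b = {"image": "street_042.jpg", "label": "pedestrian", "bbox": [110, 50, 40, 80]}

score = iou(annotation_a["bbox"], annotation_b["bbox"])
print(f"IoU between annotators: {score:.2f}")  # prints 0.60
```

An IoU near 1.0 means the two boxes almost coincide. A project might choose a cutoff below which boxes are sent back for re-annotation, and that cutoff is a judgment call for each project.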

2. Video Annotation

Video annotation is like image annotation in motion. It means tagging and tracking objects across multiple frames so that AI can understand movement, behavior, and interactions over time. This is especially useful in applications like surveillance, sports analysis, or autonomous navigation.

3. Audio Annotation

This involves identifying and tagging different types of sounds in an audio file—such as music, speech, background noise, or emotional tone. It also includes speaker identification and transcription. It’s crucial for voice assistants, call center analytics, and even podcast indexing.
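As an illustration, audio annotations are often stored as time-stamped segments. The sketch below assumes a hypothetical `AudioSegment` record with a start time, end time, sound type, optional speaker, and optional transcript, then totals speaking time per speaker, as a call center analytics tool might.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class AudioSegment:
    """One labeled stretch of an audio file (hypothetical schema)."""
    start_s: float
    end_s: float
    kind: str                      # e.g. "speech", "music", "noise"
    speaker: Optional[str] = None  # set only for speech segments
    transcript: str = ""


def speech_seconds_per_speaker(segments):
    """Sum the duration of speech segments for each identified speaker."""
    totals = defaultdict(float)
    for seg in segments:
        if seg.kind == "speech" and seg.speaker is not None:
            totals[seg.speaker] += seg.end_s - seg.start_s
    return dict(totals)


# A made-up annotated support call.
call = [
    AudioSegment(0.0, 4.5, "speech", "agent", "Thanks for calling, how can I help?"),
    AudioSegment(4.5, 6.0, "noise"),
    AudioSegment(6.0, 9.0, "speech", "caller", "My order has not arrived."),
    AudioSegment(9.0, 10.5, "speech", "agent", "Let me check that for you."),
]
print(speech_seconds_per_speaker(call))
```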

4. Text Annotation

Text annotation helps AI understand language by marking things like sentiment (positive/negative), intent (what the user wants), or named entities (people, places, dates). It’s key to making chatbots more conversational, translating languages, or filtering harmful content.
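A text annotation can be pictured as the original sentence plus a few document-level tags and a list of labeled character spans. The sketch below uses a made-up schema. The field names, the label set, and the helper functions `make_span` and `span_texts` are assumptions for illustration, not a standard format.

```python
def make_span(text, phrase, label):
    """Locate the first occurrence of phrase in text and return a labeled character span."""
    start = text.index(phrase)  # raises ValueError if the phrase is absent
    return {"start": start, "end": start + len(phrase), "label": label}


def span_texts(annotation):
    """Check that every entity span lies inside the text and return the surface strings."""
    text = annotation["text"]
    surfaces = []
    for ent in annotation["entities"]:
        if not (0 <= ent["start"] < ent["end"] <= len(text)):
            raise ValueError(f"span out of bounds: {ent}")
        surfaces.append(text[ent["start"]:ent["end"]])
    return surfaces


text = "Maria flew to Lisbon on 3 May 2024 and loved the trip."
annotation = {
    "text": text,
    "sentiment": "positive",       # document-level sentiment tag
    "intent": "share_experience",  # hypothetical intent label
    "entities": [
        make_span(text, "Maria", "PERSON"),
        make_span(text, "Lisbon", "PLACE"),
        make_span(text, "3 May 2024", "DATE"),
    ],
}
print(span_texts(annotation))
```

Storing character offsets rather than only the matched words lets a reviewer verify each label against the exact position in the source text.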

3.4 Applications of Data Annotation

1. Retail and E-commerce

In online retail, annotated data is key to personalizing the customer experience. Product images are tagged to improve visual search capabilities, while user reviews and queries are labeled for intent analysis. This enables smarter product recommendations, virtual try-ons, inventory detection in stores, and AI-driven customer support—enhancing both front-end engagement and back-end operations.

2. Natural Language Processing (NLP)

In the world of language, annotation makes meaning machine-readable. From sentiment analysis—understanding if a review is positive or negative—to text classification, named entity recognition, and chatbot intent detection, annotated language data enables AI to understand grammar, tone, and context. It also plays a huge role in training models for translation and speech-to-text systems.

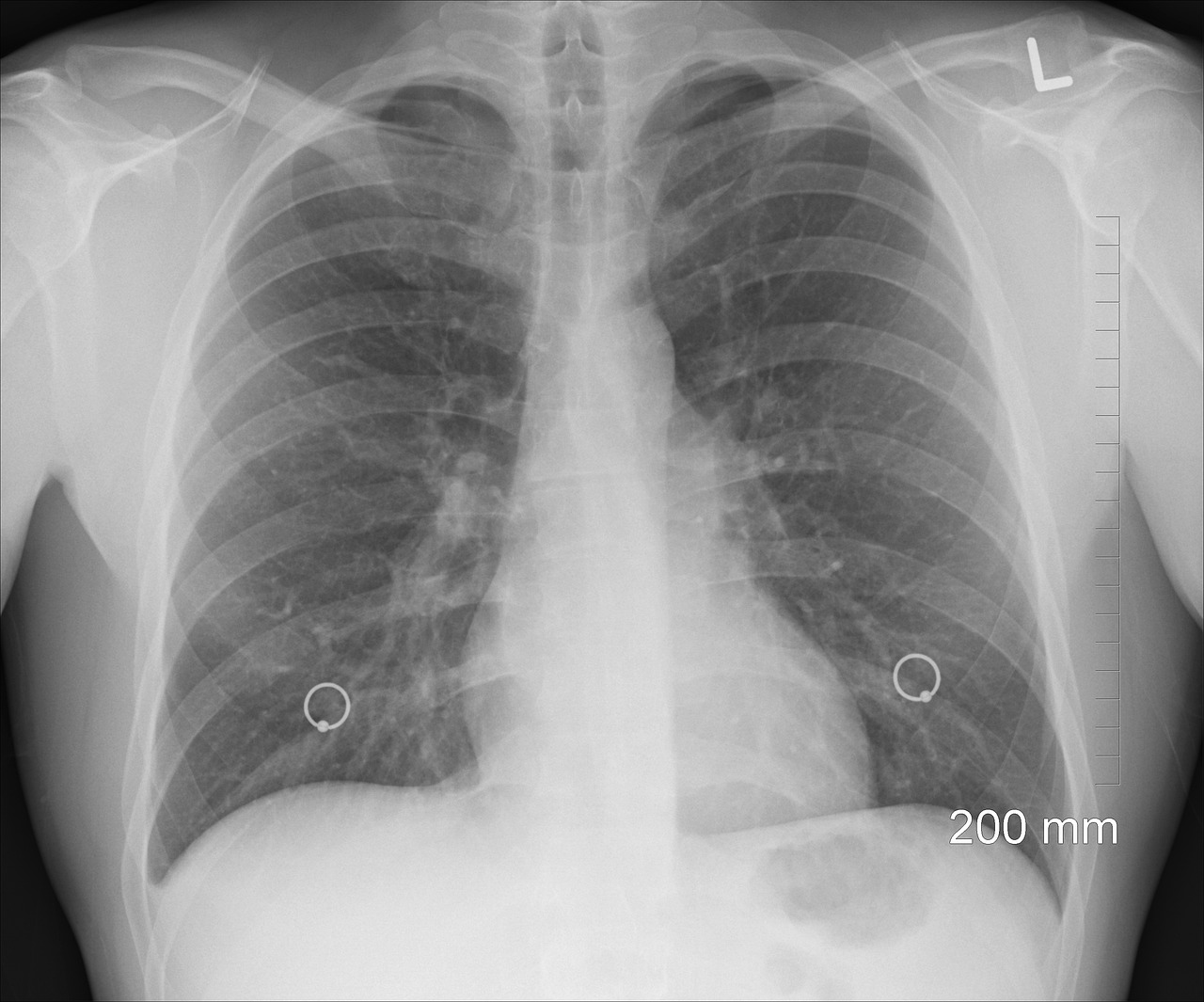

3. Healthcare AI

Data annotation is revolutionizing the medical field by helping machines understand complex clinical data. Labeled medical images (like MRIs and X-rays) enable models to detect anomalies such as tumors or fractures. Annotated electronic health records (EHRs) and pathology reports also help train NLP models to extract insights, predict patient outcomes, and recommend treatments. This accelerates diagnosis and supports doctors with data-backed decisions.

3.5 Data Collection Methods

1. User Participation:

This involves collecting data directly from real-world sources, often through user participation. A well-known example is Tesla, which gathers vast amounts of driving data from its global fleet. Each vehicle acts as a data source, contributing to the continuous learning of Tesla’s AI models. This kind of collection is powerful because it captures real-life conditions, behaviors, and edge cases that make AI systems more robust and reliable.

2. Open-Source Datasets:

Open-source datasets are publicly available collections of labeled or raw data that developers and researchers can use to train or test AI models. These datasets are incredibly valuable for startups, researchers, and even large tech companies looking to avoid the high costs of proprietary data. Examples include ImageNet (for computer vision), Common Crawl (for NLP), and COCO (for object detection). While these datasets are cost-effective and accessible, they need careful curation to ensure quality and diversity.

3. Synthetic Data:

Synthetic data is artificially generated rather than collected from real-world scenarios. It’s especially useful in environments where data is scarce, expensive, or sensitive—such as healthcare or autonomous driving. For instance, developers can simulate thousands of driving situations using 3D models and virtual environments. This method allows for precise control over variables, helping to create balanced datasets and reduce bias while accelerating AI development. Here’s a deeper dive into the applications of synthetic data, along with real-world examples:

Real-World Examples of Synthetic Data Applications:

i) Autonomous Driving:

Synthetic data is crucial in training autonomous vehicles (AVs). Real-world driving data can be expensive and difficult to obtain in large quantities, especially for rare or dangerous scenarios like car accidents or extreme weather conditions.

Waymo: Originally Google’s self-driving car project and now an Alphabet subsidiary, Waymo has used synthetic data to simulate various driving environments, traffic patterns, and complex road situations. This allows Waymo to train its AI models on a variety of conditions without having to physically test vehicles in every possible situation.

DeepDrive: A synthetic data generation tool used by car manufacturers to create virtual driving environments for training AV models. By using 3D models and game engines, synthetic data helps AV systems learn to handle scenarios like road construction, night driving, or erratic driver behavior that would be challenging to capture in the real world.

ii) Healthcare and Medical Imaging:

Synthetic data is playing a transformative role in healthcare by enabling the development of models that require large annotated datasets for training, but where data is limited or difficult to access due to privacy restrictions or cost.

Artificial medical data: In healthcare, synthetic medical records are generated, including patient data, medical images, and diagnostic records. These datasets are useful for developing predictive models for patient care, especially in rare diseases or sensitive areas like cancer detection.

Synapse: In medical imaging, synthetic data is used to generate realistic but artificially created CT scans, MRIs, and X-rays. These images can then be used to train AI models for early disease detection or to teach medical professionals. Synthetic medical imaging helps overcome the challenge of limited data for rare conditions or unbalanced datasets, improving diagnostic accuracy.

iii) Finance:

The financial industry is another sector where synthetic data plays a crucial role in building predictive models without exposing sensitive or personal financial information.

DataRobot: A machine learning platform that uses synthetic financial data to build AI models for stock price prediction, fraud detection, and customer churn prediction. Synthetic data helps train models to recognize patterns in transactions without using actual sensitive customer data, which supports privacy compliance.

Synthetic Credit Scoring: Credit scoring models can be trained using synthetic financial datasets that simulate various customer financial behaviors, from loan repayment patterns to spending habits. By generating realistic but synthetic financial profiles, lenders can predict creditworthiness without breaching privacy or requiring access to real customer data.

iv) Retail and E-commerce:

Retailers use synthetic data to improve customer experience, enhance product recommendations, and optimize their supply chains.

Virtual Try-Ons: Companies like Zara and Nike use synthetic data to create digital representations of clothing, shoes, and accessories. This allows customers to virtually try on clothes through augmented reality (AR) applications. Synthetic data is used to simulate how clothing fits on various body types, providing more personalized recommendations.

Supply Chain Optimization: Synthetic data is used in the retail sector to simulate customer demand, product availability, and logistical operations. These simulations allow AI systems to predict sales trends, manage stock levels, and optimize distribution routes without having to wait for real-world data, which could take months to gather.

v) Robotics:

Robots used in manufacturing, warehouse management, or medical applications often need to operate in complex, dynamic environments. Synthetic data helps train these robots to interact with objects, navigate environments, and understand human instructions.

OpenAI Gym: This simulation platform allows developers to generate synthetic data for training AI models in robotics, such as simulating a robot’s interaction with its environment. Data is created by virtually testing robots in various tasks like picking up objects, navigating a space, or assembling parts, which reduces the need for physical testing and accelerates the learning process.

Amazon Robotics: Amazon uses synthetic data to train warehouse robots to optimize picking and sorting. The company generates virtual models of the warehouse environment, where robots learn to navigate, avoid obstacles, and handle packages efficiently, all before being deployed in real-world warehouses.

Synthetic data is reshaping industries by providing cost-effective, secure, and scalable datasets for training AI models. By enabling the creation of diverse, balanced, and privacy-compliant datasets, synthetic data accelerates the development of advanced AI systems in fields like healthcare, autonomous driving, retail, and robotics. As technology evolves, synthetic data is poised to become an even more powerful tool in the AI toolkit, enabling innovation while minimizing ethical and privacy concerns.
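The claim that synthetic data gives precise control over variables and helps balance a dataset can be shown with a toy generator. The sketch below is only an illustration using Python’s standard library, not how any company named above builds its simulations. The scenario fields, value ranges, and the function name `generate_scenarios` are all assumptions.

```python
import itertools
import random
from collections import Counter

WEATHER = ["clear", "rain", "fog", "snow"]
TIME_OF_DAY = ["day", "night"]


def generate_scenarios(per_combination, seed=0):
    """Create driving-scenario records with every weather/time pair equally represented."""
    rng = random.Random(seed)  # fixed seed so the dataset is reproducible
    scenarios = []
    for weather, time_of_day in itertools.product(WEATHER, TIME_OF_DAY):
        for _ in range(per_combination):
            scenarios.append({
                "weather": weather,
                "time_of_day": time_of_day,
                # Randomized details within assumed, hand-picked ranges.
                "pedestrian_count": rng.randint(0, 5),
                "ego_speed_kmh": round(rng.uniform(20.0, 90.0), 1),
            })
    rng.shuffle(scenarios)
    return scenarios


dataset = generate_scenarios(per_combination=25)
print(len(dataset), Counter(s["weather"] for s in dataset))
```

In real driving logs, snow at night might be rare. Here it appears exactly as often as clear daytime driving because the generator enumerates every combination. A production system would render these parameters into images or sensor data with a simulator, which is outside the scope of this sketch.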

3.6 Real-Life Examples

1. Mozilla’s Common Voice:

This is one of the most prominent crowdsourced projects aimed at building open and diverse voice datasets. Mozilla invites people from all over the world to donate their voice by reading predefined sentences in their native languages. This helps in training voice recognition systems that are more inclusive and accurate across different accents, dialects, and speech patterns. It’s a powerful example of how community-driven efforts can shape more democratic and accessible AI tools.

2. Tesla’s Fleet Learning:

Tesla uses real-time driving data collected from its global fleet of vehicles to train and refine its autonomous driving systems. Every time a Tesla car is on the road, it’s gathering data—about road conditions, driving behavior, obstacles, and more. This continuous feedback loop allows Tesla to improve its AI models rapidly and adapt them to real-world scenarios. It’s a groundbreaking approach that showcases how crowdsourced data from users can directly enhance AI performance in safety-critical applications like self-driving cars.

3.7 Expert Knowledge Contribution

AI systems often rely on expert knowledge to enhance decision-making. Companies like Apple incorporate expert insights to refine AI applications and create knowledge-based systems.

1. Creative Problem Solving:

Crowdsourcing draws on a global pool of thinkers, innovators, and everyday users who can contribute fresh ideas and unique solutions. From improving chatbot conversations to designing smarter recommendation systems, it brings creativity into AI development. It allows developers to approach problems from multiple angles, using diverse perspectives to uncover unexpected insights or solutions that may not emerge in a closed environment.

2. Algorithm Refinement:

AI systems are only as good as the feedback they receive. Crowdsourcing enables ongoing input from real users, which helps fine-tune algorithms over time. Whether it’s identifying flaws, adjusting outputs, or highlighting edge cases, this continuous feedback loop strengthens AI performance and reliability. It ensures the models are not just efficient, but also practical and user-friendly in real-world settings.
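The feedback loop described above can be sketched as a simple triage step: gather thumbs-up and thumbs-down votes on model outputs and flag the ones users consistently reject, so they can be reviewed or relabeled. The function name `select_for_review`, the thresholds, and the sample data are hypothetical.

```python
from collections import defaultdict


def select_for_review(feedback, min_votes=3, max_approval=0.5):
    """Return the IDs of outputs that enough users rated and that at most half found helpful."""
    tallies = defaultdict(lambda: [0, 0])  # item_id -> [helpful votes, total votes]
    for entry in feedback:
        tally = tallies[entry["item_id"]]
        tally[0] += 1 if entry["helpful"] else 0
        tally[1] += 1
    flagged = [
        item_id
        for item_id, (helpful, total) in tallies.items()
        if total >= min_votes and helpful / total <= max_approval
    ]
    return sorted(flagged)


# Made-up user feedback on three chatbot replies.
feedback = [
    {"item_id": "reply_17", "helpful": True},
    {"item_id": "reply_17", "helpful": False},
    {"item_id": "reply_17", "helpful": False},
    {"item_id": "reply_18", "helpful": True},
    {"item_id": "reply_18", "helpful": True},
    {"item_id": "reply_18", "helpful": True},
    {"item_id": "reply_19", "helpful": False},
]
print(select_for_review(feedback))  # prints ['reply_17']
```

The `min_votes` floor keeps a single unhappy user from flagging an output on their own, which is why `reply_19` is not selected. Where to set both thresholds depends on how much feedback a product actually receives.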

Contributor:

Nishkam Batta

Editor-in-Chief – HonestAI Magazine

AI consultant – GrayCyan AI Solutions

Nish specializes in helping mid-size American and Canadian companies assess AI gaps and build AI strategies to accelerate AI adoption. He also helps develop custom AI solutions and models at GrayCyan. Nish runs a program for founders to validate their app ideas and go from concept to buzz-worthy launches with traction, reach, and ROI.

