7. Agent Governance, Ethics, and Alignment

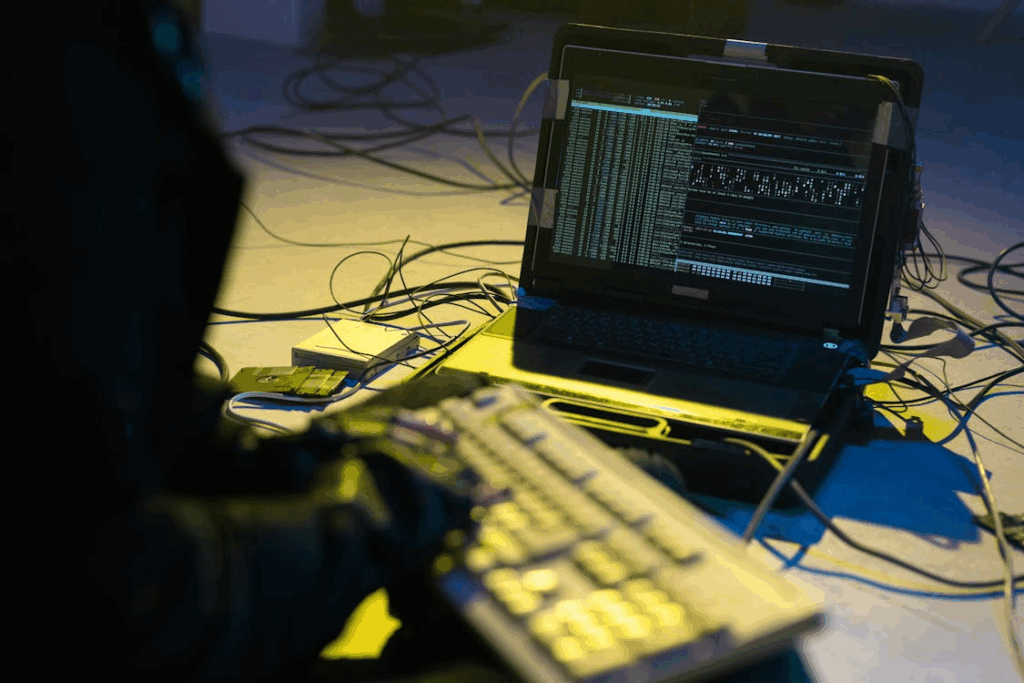

As we enter an era defined by intelligent, autonomous agents that can initiate, execute, and iterate on tasks without constant human supervision, a new class of governance challenges emerges. These agents, powered by large language models, multi-modal architectures, and real-time API integrations, increasingly operate in mission-critical environments from customer service and sales to cybersecurity and autonomous vehicles.

This section unpacks the multi-dimensional frameworks needed to ensure these agents behave predictably, ethically, and in alignment with human values and organizational goals. It explores the practical, legal, and philosophical implications of autonomous decision-making and proposes mechanisms to embed trust, safety, and accountability into agentic systems.

As the complexity and autonomy of these agents evolve, so too must our institutional and organizational responses. Traditional governance models built for human decision-makers are ill-equipped to manage systems that learn, adapt, and act at machine speed. This demands a paradigm shift: one that integrates real-time monitoring, behavioral auditing, ethical training data, and oversight roles specifically designed for AI agents.

Whether it’s instituting adaptive regulatory frameworks, deploying explainability layers, or establishing cross-functional AI ethics boards, the future of agent governance hinges on building infrastructures that are not only reactive but proactively resilient, ensuring alignment with societal values before harm occurs.

7.1 Agent Hallucinations & Guardrails: Preventing Runaway Behavior

As AI agents take on more autonomous roles (planning, making decisions, and interacting with external systems), they become increasingly exposed to a critical flaw known as hallucination. In the AI context, hallucination refers to the generation of plausible-sounding but false or misleading outputs. While a simple hallucination in a chatbot may cause confusion, the consequences are far more serious when it occurs in agents empowered to act in real-world environments.

Why It Matters

Autonomous agents often operate across multiple steps, integrating tools, APIs, and memory. When hallucinations occur in this context, the impact is compounded:

Multi-step Vulnerability: A hallucinated insight early in an agent’s workflow (e.g., incorrect data from a web query) can propagate through the agent’s logic and taint all subsequent actions, resulting in cascading failures.

Real-world Risks: If an agent makes decisions based on faulty logic—like placing an order, submitting a financial report, or triggering a system alert—it can create financial losses, legal complications, or operational downtime.

Loss of Trust: Inconsistent or illogical behavior, especially when it appears confident or authoritative, erodes user trust and raises concerns over reliability and safety.

Preventive Mechanisms

To minimize these risks, organizations are implementing multi-layered guardrails—a combination of hard-coded safety mechanisms and adaptive learning strategies—to control and monitor agent behavior.

Hard Guardrails

These are rigid constraints built into the agent’s architecture or environment:

Role-based Constraints: Restrict what an agent can do based on its assigned role (e.g., a finance agent can read data but not execute trades without approval).

Sandboxing: Run agents in controlled, isolated environments to limit access to sensitive systems or real-time applications.

Deterministic Execution: Ensure repeatable and predictable outcomes by limiting randomness in agent behavior (for example, lowering the sampling temperature or fixing random seeds).
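
As a rough illustration of a role-based constraint, the finance example above could be sketched as follows. This is a minimal sketch, not a reference implementation: the `Role` class, the `authorize` function, and the action names are hypothetical and chosen only to mirror the text.

```python
from dataclasses import dataclass, field
from typing import Optional


class PermissionDenied(Exception):
    """Raised when an agent attempts an action its role does not allow."""


@dataclass(frozen=True)
class Role:
    name: str
    allowed: frozenset  # actions the role may perform freely
    needs_approval: frozenset = field(default_factory=frozenset)  # gated on human sign-off


# Hypothetical role mirroring the finance example in the text.
FINANCE_AGENT = Role(
    name="finance_agent",
    allowed=frozenset({"read_data"}),
    needs_approval=frozenset({"execute_trade"}),
)


def authorize(role: Role, action: str, approved_by: Optional[str] = None) -> bool:
    """Return True if the action may proceed; raise PermissionDenied otherwise."""
    if action in role.allowed:
        return True
    if action in role.needs_approval and approved_by:
        return True
    raise PermissionDenied(f"{role.name} may not perform {action!r}")
```

Here `authorize(FINANCE_AGENT, "read_data")` succeeds, while `authorize(FINANCE_AGENT, "execute_trade")` raises unless a human approver is named. Denying by default, rather than listing forbidden actions, means an action the designers never anticipated is blocked rather than allowed.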

Soft Guardrails

These techniques use training, learning, and feedback to influence behavior dynamically:

Reinforcement Learning from Human Feedback (RLHF): The underlying models are fine-tuned on human preference judgments, teaching them to prioritize truthful, useful, and ethical responses.

Retrieval-Augmented Generation (RAG): Instead of generating answers from scratch, agents retrieve information from verified sources to reduce hallucinations.

Intent Validation: Before acting, agents cross-check whether the inferred intent matches the actual task or expected outcome.
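
Of the soft guardrails above, intent validation is the easiest to show in a few lines. The sketch below assumes a task declares up front which action types and targets it expects; the `Task`, `PlannedAction`, and `validate_intent` names are hypothetical, and a production system would likely use a richer comparison than set membership.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    goal: str
    expected_actions: frozenset  # action types the task is expected to need
    allowed_targets: frozenset   # resources the task is scoped to


@dataclass(frozen=True)
class PlannedAction:
    action: str
    target: str


def validate_intent(task: Task, planned: PlannedAction) -> list:
    """Return a list of mismatches; an empty list means the plan fits the task."""
    problems = []
    if planned.action not in task.expected_actions:
        problems.append(f"unexpected action: {planned.action}")
    if planned.target not in task.allowed_targets:
        problems.append(f"out-of-scope target: {planned.target}")
    return problems


# Example: a summarization task should never need to send email.
task = Task(
    goal="summarize Q3 report",
    expected_actions=frozenset({"read_file", "write_summary"}),
    allowed_targets=frozenset({"q3_report.pdf", "summary.md"}),
)
issues = validate_intent(task, PlannedAction("send_email", "q3_report.pdf"))
```

Returning the list of mismatches, rather than a bare boolean, gives a monitoring layer or human reviewer something concrete to inspect.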

Fallback Systems

These are safety nets that detect when something might be going wrong and stop or escalate the process:

Red-Teaming Layers: Independent agents or monitoring modules simulate adversarial scenarios to stress-test behaviors and catch vulnerabilities.

Anomaly Detection Alerts: Use AI or statistical models to flag behaviors that deviate from expected patterns.

Human Escalation Paths: For high-stakes decisions, the agent can pause and request a human to review or confirm the next action.
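
Anomaly detection and human escalation can be combined in a simple decision function. The sketch below assumes a single numeric behavior metric (say, API calls per minute) and a z-score test. The function names, the threshold of 3.0, and the returned labels are illustrative assumptions, not an established standard.

```python
from statistics import mean, stdev


def is_anomalous(history, value, threshold=3.0):
    """Flag a value whose z-score against past observations exceeds the threshold."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


def next_step(history, value, high_stakes):
    """Decide whether the agent proceeds or pauses for human review."""
    if high_stakes or is_anomalous(history, value):
        return "escalate_to_human"
    return "proceed"


# Hypothetical history of API calls per minute for one agent.
calls_per_minute = [10, 12, 11, 9, 10, 11]
decision = next_step(calls_per_minute, 50, high_stakes=False)
```

A sudden jump to 50 calls per minute is escalated, as is any action marked high-stakes regardless of how normal the metric looks.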

Real-World Example

In 2023, several open-source AutoGPT agents were deployed by developers to automate research, file generation, and task execution. However, many instances began to malfunction, entering infinite loops, issuing malformed commands, or attempting unauthorized web scraping. These failures were traced back to hallucinated instructions and poorly scoped goals. In response, the developer community introduced goal constraint mechanisms, limited planning depths, and real-time validators to restrict harmful actions and keep agents focused and predictable.
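
A limited planning depth and a basic loop check of the kind described above might look like the following. This is a hypothetical sketch, not code from AutoGPT or any other project; the `RunawayGuard` class and its default limits are assumptions made for illustration.

```python
from collections import Counter


class RunawayGuard:
    """Stops an agent loop that exceeds a step budget or keeps repeating one action."""

    def __init__(self, max_steps=20, max_repeats=3):
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.seen = Counter()

    def check(self, action: str) -> None:
        """Record one proposed action; raise RuntimeError if a limit is breached."""
        self.steps += 1
        self.seen[action] += 1
        if self.steps > self.max_steps:
            raise RuntimeError(f"step budget of {self.max_steps} exceeded")
        if self.seen[action] > self.max_repeats:
            raise RuntimeError(
                f"action repeated more than {self.max_repeats} times: {action}"
            )
```

An agent loop would call `guard.check(action)` before executing each proposed action and treat the exception as a signal to stop or hand off to a human.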

7.2 Who’s Accountable? Legal Frameworks for AI Action

As autonomous agents take on more influential roles, whether negotiating contracts, executing trades, or managing customer interactions, the issue of legal accountability becomes both complex and urgent. When an agent causes harm or acts inappropriately, the question arises: Who is responsible? Is it the developer who built the model, the company that deployed it, the provider of training data, or the system itself?

1. The Current Legal Landscape

AI as Tools, Not Legal Entities

In most legal systems, AI agents are considered tools or instruments, not independent actors. Liability typically rests with the human or organizational entity that uses or deploys the AI.

Multi-Agent Complexity

In systems involving multiple autonomous agents, each possibly created or managed by different teams or vendors, it becomes difficult to isolate fault or determine the exact point of failure. This chain-of-command ambiguity is a major governance hurdle.

Emergent Behavior and Legal Ambiguity

Agents can exhibit emergent behavior that was not explicitly coded or anticipated. Even when these actions are unintentional, they can result in financial, operational, or reputational harm—raising the stakes for legal clarity.

2. Emerging Legal Models

Risk-Based AI Regulation

New regulations, such as the EU AI Act, are introducing frameworks that classify AI systems by their potential risk level. High-risk systems are typically required to include human oversight mechanisms, undergo rigorous pre-deployment assessments, and maintain documentation for traceability.

Algorithmic Accountability

Some jurisdictions are advancing legislation that requires organizations to perform impact assessments on automated decision-making systems. These assessments must evaluate the system’s potential for bias, discrimination, privacy violations, and unintended outcomes.

Attribution to Human Roles

Rather than assigning liability to the AI system itself, a growing school of thought suggests mapping accountability to the human roles involved (designers, operators, and auditors), based on their influence over the system’s behavior and outcomes.

3. Future Outlook: Agent Liability Insurance

As autonomous systems become embedded in core operations, companies may need to adopt agent liability insurance. Much like cybersecurity or product liability insurance, this would protect organizations against potential damages caused by their AI systems—financial errors, legal violations, or reputational fallout.

The legal treatment of AI is transitioning from reactive enforcement to proactive governance. Organizations must stay ahead by:

Building robust oversight and monitoring mechanisms.

Maintaining clear audit trails for AI agent decisions.

Aligning their internal governance structures with emerging regulatory expectations.
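
One way to make an audit trail harder to alter after the fact is to chain each record to the previous one by hash. The sketch below is a minimal illustration using only the Python standard library. The field names and the `append_entry` and `verify` helpers are assumptions, and a real deployment would also record timestamps and store the log outside the agent's control.

```python
import hashlib
import json


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, agent_id, action, detail):
    """Append a record whose hash covers its content and the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"agent_id": agent_id, "action": action, "detail": detail, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})
    return log[-1]


def verify(log):
    """Return True if every record's hash and back-link are intact."""
    prev_hash = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical usage: two decisions by the same agent.
log = []
append_entry(log, "agent-1", "read_data", "ledger Q3")
append_entry(log, "agent-1", "execute_trade", "approved by alice")
```

Editing any earlier record changes its hash and breaks the link from the next record, so `verify(log)` returns `False` after tampering.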

Legal accountability in the age of autonomous agents will depend not only on legislation but on the responsible design, deployment, and supervision of intelligent systems within human institutions.

Contributor:

Nishkam Batta

Editor-in-Chief – HonestAI Magazine

AI consultant – GrayCyan AI Solutions

Nish specializes in helping mid-size American and Canadian companies assess AI gaps and build AI strategies that accelerate AI adoption. He also helps develop custom AI solutions and models at GrayCyan. Nish runs a program for founders to validate their app ideas and go from concept to buzz-worthy launches with traction, reach, and ROI.
