The idea that technology is neutral is a myth. Every dataset tells a story—not just of numbers, but of people, choices, and often, blind spots. Recognizing bias in data isn’t just about fixing code—it’s about restoring human dignity in systems that increasingly shape how we live, work, and are treated.

1. Collect Data That Reflects Real People—All of Them

Many AI systems are built using data from a narrow slice of humanity, often skewed toward Western, white, male, and English-speaking populations. But the world is far more diverse than that. When we fail to include voices from different genders, ethnicities, regions, and languages, we train systems to ignore or misunderstand millions of people.

Imagine a voice assistant that can’t understand your accent—or a medical AI that misses a diagnosis because your skin tone wasn’t in the training data. That’s not innovation; that’s exclusion.
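One way to make this concrete is to compare who is in a dataset against who the system is meant to serve. The sketch below is only an illustration: the `accent` field, the sample counts, and the reference shares are invented for the example, and real reference shares would have to come from a source you trust.

```python
from collections import Counter

def representation_gaps(records, group_key, reference_shares):
    """Return (dataset share - reference share) for each reference group.

    Negative values mean the group is under-represented in the dataset
    relative to the reference population.
    """
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        gaps[group] = round(observed - expected, 2)
    return gaps

# Hypothetical toy data: accent labels attached to speech samples.
samples = (
    [{"accent": "US"}] * 8
    + [{"accent": "Indian"}] * 1
    + [{"accent": "Nigerian"}] * 1
)
# Hypothetical target shares for the intended user base (assumed, not measured).
reference = {"US": 0.4, "Indian": 0.4, "Nigerian": 0.2}

gaps = representation_gaps(samples, "accent", reference)
print(gaps)
```

A large negative gap is a prompt to go collect more data from that group, not proof that the model will fail for them.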

2. Audit Often, With Empathy

Checking for fairness isn’t a one-time task; it’s a continuous act of care. Models should be regularly tested on how they perform for each group they serve, especially the most vulnerable, by comparing measures such as accuracy and error rates group by group and repeating the check whenever the data or the model changes. It’s not just about catching errors; it’s about listening, adapting, and doing better every time.

Would you trust a doctor who never updated their knowledge? Then why trust an AI that was never re-evaluated?
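A recurring audit can start very small. The following sketch computes accuracy separately for each group and reports the largest gap between groups. The group names, labels, and predictions are made up for illustration, and accuracy is only one of several measures an audit might track.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

def largest_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())

# Hypothetical evaluation data for two groups, "A" and "B".
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)               # {'A': 1.0, 'B': 0.5}
print(largest_gap(per_group))  # 0.5
```

The overall accuracy here is 75%, which looks acceptable until the breakdown shows that every mistake falls on group B. Running a check like this on a schedule, and after every retraining, is what turns an audit into a habit.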

3. Label With Care and Cultural Sensitivity

Behind every label—“happy,” “angry,” “threatening,” “beautiful”—is a person making a judgment. If the labeling team lacks cultural diversity or context, those judgments can become distorted and harmful.

Think of two people from different cultures reacting to the same event—one laughs, the other stays silent. Should an algorithm label one as “engaged” and the other as “disinterested”? Labels must be informed, not imposed.
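One practical way to surface judgment calls like this is to have several annotators label each item and flag the items they disagree on. The sketch below assumes a simple majority-share measure and an arbitrary 0.75 threshold; the clip names and labels are hypothetical.

```python
from collections import Counter

def agreement(labels):
    """Share of annotators who chose the most common label for an item."""
    counts = Counter(labels)
    return counts.most_common(1)[0][1] / len(labels)

def flag_contested(annotations, threshold=0.75):
    """Return the items whose agreement falls below the threshold."""
    return [
        item for item, labels in annotations.items()
        if agreement(labels) < threshold
    ]

# Hypothetical labels from four annotators per video clip.
annotations = {
    "clip_1": ["engaged", "engaged", "engaged", "engaged"],
    "clip_2": ["engaged", "disinterested", "engaged", "disinterested"],
}

contested = flag_contested(annotations)
print(contested)  # ['clip_2']
```

Contested items are candidates for discussion with annotators who know the cultural context, rather than for a silent majority vote.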

4. Tell the Story Behind the Data

Too often, datasets are treated like black boxes: no explanation of where the data came from, who collected it, or under what conditions. That’s a problem. Transparency isn’t just a technical requirement; it’s a moral obligation. People deserve to know what they’re being judged by.

You wouldn’t want a teacher grading your paper without knowing the rubric. Why should AI work that way?
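Documentation can be treated as part of the dataset itself. As a rough sketch, a small record can travel with the data and a check can refuse to call the dataset ready while key questions are unanswered. The `DatasetCard` class and its field names are invented for this example and are not a standard format.

```python
from dataclasses import dataclass, fields

@dataclass
class DatasetCard:
    """Hypothetical provenance record shipped alongside a dataset."""
    name: str
    source: str = ""                 # where the data came from
    collected_by: str = ""           # who collected it
    collection_conditions: str = ""  # when, how, and with what consent
    known_gaps: str = ""             # groups or cases known to be missing

def missing_fields(card):
    """List the fields that are still empty or whitespace-only."""
    return [f.name for f in fields(card) if not getattr(card, f.name).strip()]

# Hypothetical, partially documented dataset.
card = DatasetCard(name="speech-v1", source="volunteer recordings")

unanswered = missing_fields(card)
print(unanswered)
```

For the card above, the check reports that who collected the data, under what conditions, and what is known to be missing are all still undocumented.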

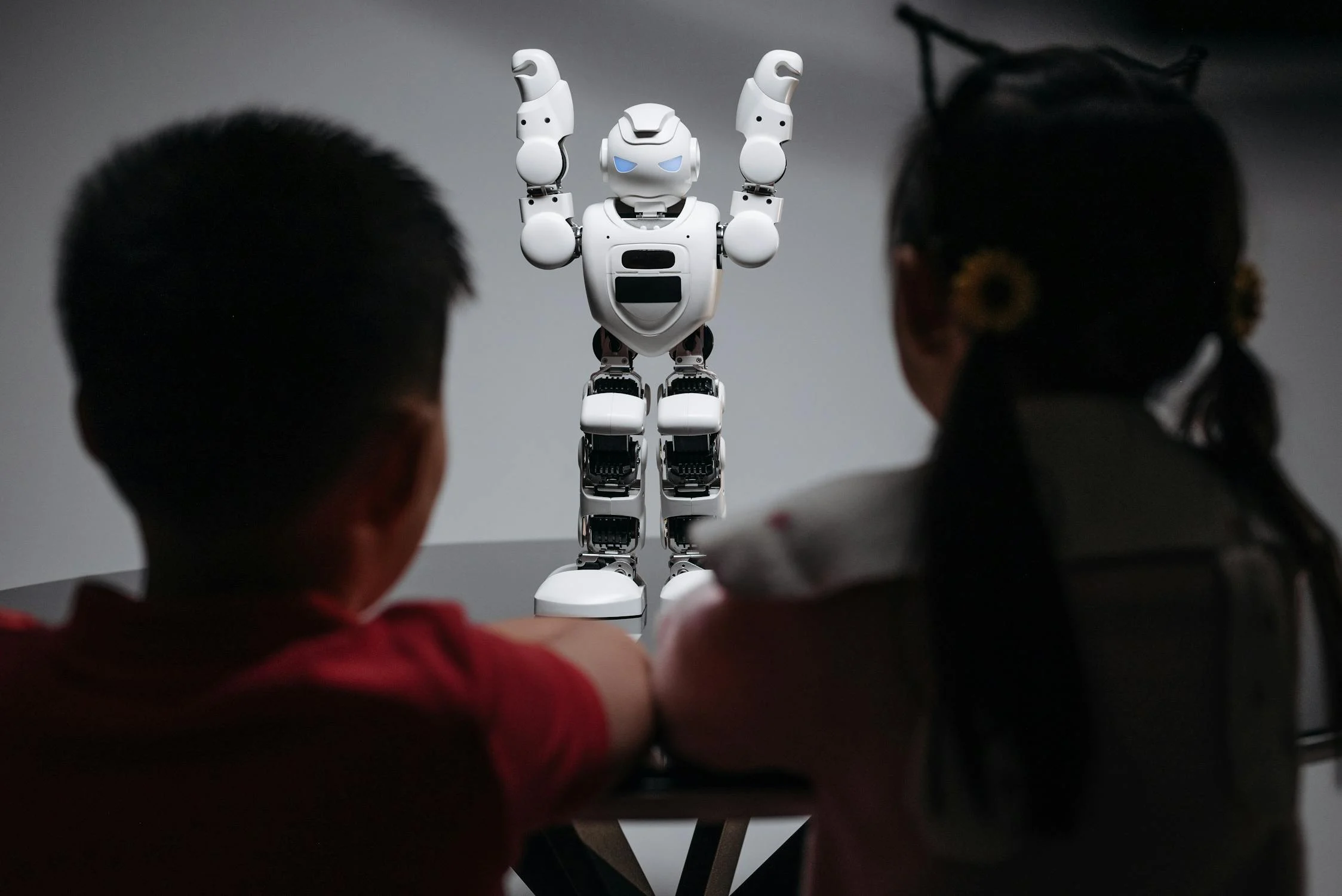

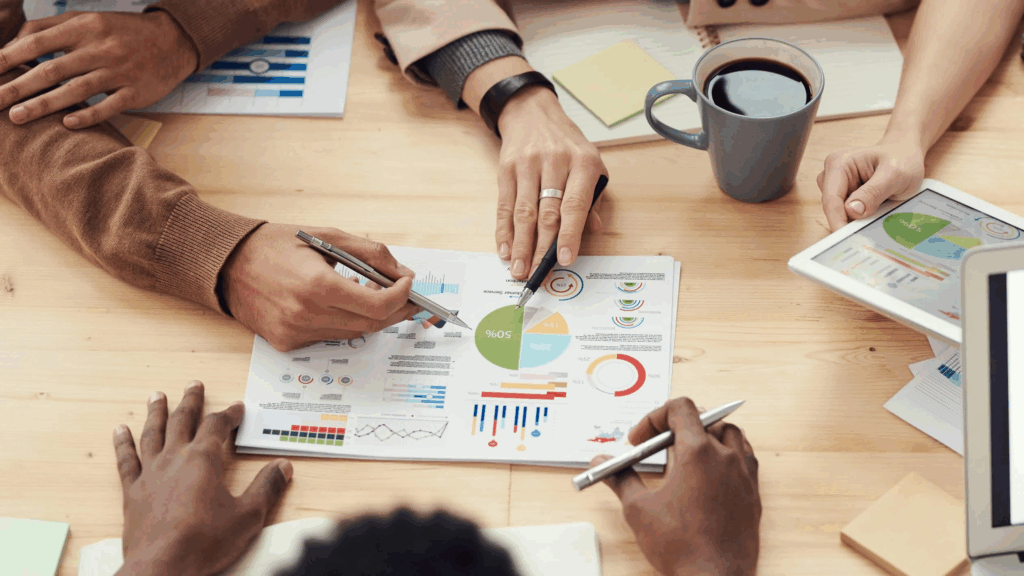

5. Design With, Not Just For, Communities

Instead of building models in labs and releasing them into the wild, why not co-create with the people who will be most affected? Involve real users—especially from marginalized communities—early in the process. Let their lived experiences guide the development of ethical, effective AI.

If AI is going to shape our lives, then everyone deserves a seat at the design table—not just the engineers.

Final Thought

Responsible AI starts with responsible humans.

It’s not enough to say “the data made me do it.” Every choice—from how we collect data to how we audit it—reflects our values. If we want AI to serve humanity, we have to start by seeing and valuing all of humanity in the datasets we build it on.
